Would this solve this issue of unencrypted data being stored on nodes though?

It doesn’t solve the issue of a client sending chunks that are not encrypted, so that remains.

It also isn’t consistent in the sense that the self encryption API behaves differently depending on the size of the data presented to it. That’s going to catch developers out and lead them down blind alleys until they understand this (unless they stick to the higher level file based API).

Also, this may not be limited to small files - will it also be a problem when storing a small data map corresponding to larger files, or is that covered? @joshuef

Again, it feels inelegant and confusing, and doesn’t stop nodes being sent dodgy data.

It would. Either you are low level and we prevent sending unencrypted data (error).

OR

You are high level, and all data is then encrypted.

That’s sorted via nodes encrypting data.

Well… It would be consistent in that it encrypts it or errors out. Too small == error. Why too small is in the weeds of how self encryption works. But if it can’t work, that layer should error… (not just pass through).
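Here is a minimal Rust sketch of the encrypt-or-error behaviour described here. The names `self_encrypt`, `EncryptError` and `MIN_ENCRYPTABLE_BYTES` are hypothetical and are not the real `self_encryption` crate API. The 3 KB threshold is only the rough figure floated later in this thread.

```rust
use std::fmt;

/// Hypothetical minimum input size for self-encryption. The thread only says
/// "3KB (?)", so this value is an assumption, not the crate's real constant.
const MIN_ENCRYPTABLE_BYTES: usize = 3 * 1024;

#[derive(Debug, PartialEq)]
enum EncryptError {
    /// Input too small to split and encrypt, so we refuse rather than pass it through.
    TooSmall { len: usize, min: usize },
}

impl fmt::Display for EncryptError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            EncryptError::TooSmall { len, min } => {
                write!(f, "{len} bytes is below the {min}-byte self-encryption minimum")
            }
        }
    }
}

/// Stand-in for the real output (a data map plus encrypted chunks).
#[derive(Debug)]
struct Encrypted {
    input_len: usize,
}

/// Encrypt or error: small data is never handed back unencrypted.
fn self_encrypt(data: &[u8]) -> Result<Encrypted, EncryptError> {
    if data.len() < MIN_ENCRYPTABLE_BYTES {
        return Err(EncryptError::TooSmall {
            len: data.len(),
            min: MIN_ENCRYPTABLE_BYTES,
        });
    }
    // The actual chunking and encryption are elided in this sketch.
    Ok(Encrypted { input_len: data.len() })
}

fn main() {
    for input in [b"hello".to_vec(), vec![0u8; 4096]] {
        match self_encrypt(&input) {
            Ok(enc) => println!("encrypted {} bytes", enc.input_len),
            Err(e) => println!("refused: {e}"),
        }
    }
}
```

The error variant lets the caller, not the library, decide what happens to small data.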

Well the data map is not stored by default now. So that’s fine.

Or you choose to make it public (and published), so also fine?

I didn’t see nodes encrypting data mentioned, so if they do that’s covered with the downside that you can’t restore after a reboot/crash. But that’s not seen as much of an issue given we’re back to small nodes.
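To make the restart downside concrete, here is a toy Rust sketch of a node that encrypts records before writing them to "disk" with a key that exists only in memory. Everything in it is hypothetical: `Node`, `put`, `get` and `restart` are illustrative names. The XOR keystream is an insecure stand-in for a real authenticated cipher and is used only so the example runs with the standard library alone. After a restart the node has a fresh key, so its old records no longer decrypt and would have to be fetched again.

```rust
use std::collections::hash_map::RandomState;
use std::collections::HashMap;
use std::hash::{BuildHasher, Hasher};

/// Toy XOR keystream (xorshift64). NOT secure: a stand-in for a real AEAD cipher.
/// Applying it twice with the same key returns the original bytes.
fn xor_keystream(key: u64, data: &[u8]) -> Vec<u8> {
    let mut state = key;
    data.iter()
        .map(|b| {
            state ^= state << 13;
            state ^= state >> 7;
            state ^= state << 17;
            b ^ (state as u8)
        })
        .collect()
}

struct Node {
    /// Held only in memory and regenerated on every start.
    key: u64,
    /// Stands in for the on-disk record store, which does survive a restart.
    disk: HashMap<String, Vec<u8>>,
}

impl Node {
    fn start(disk: HashMap<String, Vec<u8>>) -> Self {
        // Fresh random key per start; `| 1` keeps the xorshift state non-zero.
        let key = RandomState::new().build_hasher().finish() | 1;
        Node { key, disk }
    }

    fn put(&mut self, name: &str, record: &[u8]) {
        self.disk.insert(name.to_string(), xor_keystream(self.key, record));
    }

    /// Decrypts with the current key. After a restart this yields garbage
    /// (a real AEAD would fail authentication instead).
    fn get(&self, name: &str) -> Option<Vec<u8>> {
        self.disk.get(name).map(|ct| xor_keystream(self.key, ct))
    }

    fn restart(self) -> Self {
        Node::start(self.disk)
    }
}

fn main() {
    let mut node = Node::start(HashMap::new());
    node.put("chunk-a", b"some record");
    println!("readable before restart: {:?}", node.get("chunk-a").map(|r| r == b"some record"));
    let node = node.restart();
    println!("readable after restart:  {:?}", node.get("chunk-a").map(|r| r == b"some record"));
}
```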

I get your logic and agree that self encryption should not permit storage of data that won’t be encrypted. The issue I mention remains, and it is going to confuse.

I guess it comes down to how many use self encryption directly. Prominent warnings in the API docs will help. They should explain that if you want a simple, robust storage API you should use the filesystem API, and, if you want to store data directly, exactly what that means in practice: that you can only store blocks above 3KB (?), and that you will have to do your own handling of data maps to retrieve and reconstruct the original. That’s a pretty ugly option IMO, but…
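For anyone on the low-level path, "doing your own handling of data maps" roughly means the following. This Rust sketch illustrates the idea under stated assumptions and is not the `self_encryption` API: the per-chunk encryption step is omitted, `DataMap`, `store` and `retrieve` are hypothetical names, and `DefaultHasher` stands in for the cryptographic content addressing the network actually uses.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{Hash, Hasher};

/// Toy content address. DefaultHasher is NOT cryptographic; stand-in only.
fn address(chunk: &[u8]) -> u64 {
    let mut h = DefaultHasher::new();
    chunk.hash(&mut h);
    h.finish()
}

/// What the caller must keep (privately) to get the original back.
struct DataMap {
    chunk_addrs: Vec<u64>,
    total_len: usize,
}

/// Split data into chunks, store each under its address, and return the map.
/// (Encrypting each chunk is omitted in this sketch.)
fn store(data: &[u8], chunk_size: usize, net: &mut HashMap<u64, Vec<u8>>) -> DataMap {
    let chunk_addrs = data
        .chunks(chunk_size)
        .map(|c| {
            let a = address(c);
            net.insert(a, c.to_vec());
            a
        })
        .collect();
    DataMap { chunk_addrs, total_len: data.len() }
}

/// Reassemble the original from the map; None if any chunk is missing.
fn retrieve(map: &DataMap, net: &HashMap<u64, Vec<u8>>) -> Option<Vec<u8>> {
    let mut out = Vec::with_capacity(map.total_len);
    for a in &map.chunk_addrs {
        out.extend_from_slice(net.get(a)?);
    }
    Some(out)
}

fn main() {
    let mut net = HashMap::new();
    let data = b"abcdefghij".repeat(1000);
    let map = store(&data, 4096, &mut net);
    let ok = retrieve(&map, &net) == Some(data);
    println!("{} chunks, round trip ok: {ok}", map.chunk_addrs.len());
}
```

Lose the `DataMap` and the chunks are still on the network, but you can no longer find or reassemble them.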

Sorry was following on from this:

Yeh, I know what you mean. But at least it’ll confuse into a safe error and not unintentionally unencrypted data.

Perhaps encryption in safe can be improved down the line to self_encrypt OR encrypt with some key by default, or what have you. That starts to get niggly in API surface, though, and I think with the higher levels coming in, that’s ok for now?

Aye, I agree.

I think in effect the register will be encrypting via some provided PK (which only for now defaults to a wallet key… if you’re transient, or an app etc, this may be more complex and we end up at the same issue w/r/t encryption.)

Sooo, we’ll have to tidy that up eventually and get to a good flow there in general.

(I guess we could do the same for encrypt APIs on data/chunks. I’m just not sure how the client key plays out in all this. Can we rely on that? If it’s not easily portable, does it get us anything?)


I think JPL and I were confused because your simplest-solution reply looked like it meant doing only that, not node encryption.

Now I see you mention padding which also appeared to have been dropped so I’m confused what the final approach is in total.

Can you put it all together in one reply because it’s not clear across the discussion.

Here we go (from me anyway)

- Nodes encrypt to store on disk and decrypt to reply to Get

File based API

- Registers have an owner public key

- Register entries are 32 bytes

- Entries are encrypted by default (via client API) with the register owner key (the client can derive the secret key for that if he is the owner)

- Any data goes through self encryption; data less than 3K is held in the data map

- Data map for each file is in a chunk of its own, and that chunk is encrypted with the register owner’s key

- Register becomes a directory and can point to other directories or files, just like an HDD

So the chunk the entry points to holds the data map and metadata (filename for now) OR another link to another register (sub dir).

Users can traverse a directory structure that’s much larger than their machine, and so on. So all of that lives in SAFE, encrypted by default.

- Client gets the entry, decrypts it and then gets the chunk it points to

Iff an app overrides the encryption (low level API), it will be obvious that this choice was made. Devs may do this to publish directories and files.
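A rough Rust model of the register-as-directory idea above follows. It is a sketch under stated assumptions: registers and chunks are in-memory maps, `Addr`, `Target`, `Network` and `list` are hypothetical names, and the step where the client decrypts each entry with the owner’s derived key is only marked by a comment.

```rust
use std::collections::HashMap;

/// 32-byte address, matching the 32-byte register entries in the summary.
type Addr = [u8; 32];

/// What the chunk an entry points to holds: a file's data map plus metadata,
/// or a link to another register (a sub-directory).
#[allow(dead_code)]
enum Target {
    File { name: String, data_map: Vec<u8> },
    Dir { name: String, register: Addr },
}

/// In-memory stand-ins for the network's registers and chunks.
struct Network {
    registers: HashMap<Addr, Vec<Addr>>,
    chunks: HashMap<Addr, Target>,
}

/// Hypothetical helper to make readable example addresses.
fn addr(n: u8) -> Addr {
    [n; 32]
}

/// Depth-first walk of a directory register, collecting file paths.
fn list(net: &Network, register: Addr, prefix: &str, out: &mut Vec<String>) {
    for entry in &net.registers[&register] {
        // A real client would decrypt `entry` with the owner's derived secret key here.
        match &net.chunks[entry] {
            Target::File { name, .. } => out.push(format!("{prefix}/{name}")),
            Target::Dir { name, register } => {
                list(net, *register, &format!("{prefix}/{name}"), out)
            }
        }
    }
}

/// Root register 0 holds a.txt and a docs/ sub-directory (register 3) holding b.txt.
fn sample_network() -> Network {
    let mut registers = HashMap::new();
    registers.insert(addr(0), vec![addr(1), addr(2)]);
    registers.insert(addr(3), vec![addr(4)]);
    let mut chunks = HashMap::new();
    chunks.insert(addr(1), Target::File { name: "a.txt".into(), data_map: vec![] });
    chunks.insert(addr(2), Target::Dir { name: "docs".into(), register: addr(3) });
    chunks.insert(addr(4), Target::File { name: "b.txt".into(), data_map: vec![] });
    Network { registers, chunks }
}

fn main() {
    let net = sample_network();
    let mut paths = Vec::new();
    list(&net, addr(0), "", &mut paths);
    println!("{paths:?}");
}
```

Because each level is fetched only when traversed, the tree can be far larger than the local machine, which is the point made above.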

KV API

Very much as above, but the chunks that hold the data map and metadata will hold whatever the dev wishes. Encrypted by default and can be not encrypted via low level API.
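One way to make "encrypted by default, unencrypted only by explicit choice" hard to get wrong is to put the opt-out in the type system. This is a hypothetical Rust sketch: `PutOptions`, `Visibility` and `publish_unencrypted` are illustrative names, not an existing API.

```rust
/// How a value is stored. Encrypted is the default; public must be asked for.
#[derive(Debug, Clone, Copy, PartialEq, Eq, Default)]
enum Visibility {
    #[default]
    Encrypted,
    Public,
}

#[derive(Debug, Default)]
struct PutOptions {
    visibility: Visibility,
}

impl PutOptions {
    /// Hypothetical low-level opt-out; the call site makes the choice obvious.
    fn publish_unencrypted(mut self) -> Self {
        self.visibility = Visibility::Public;
        self
    }
}

fn describe_put(key: &str, opts: &PutOptions) -> String {
    match opts.visibility {
        Visibility::Encrypted => format!("{key}: stored encrypted"),
        Visibility::Public => format!("{key}: stored PUBLIC (unencrypted)"),
    }
}

fn main() {
    println!("{}", describe_put("settings", &PutOptions::default()));
    println!("{}", describe_put("site/index", &PutOptions::default().publish_unencrypted()));
}
```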

That would be a big downside with bad consequences for the network and node operators. Every glitch in the power grid would mean downloading all the data again instead of just a one-minute reboot. Buying a UPS is a big cost for a home user, and aiming for super-long uptime will mean unsecured, unpatched machines. Altogether it would be a complication for node operators and cause a lot of unnecessary data transfers in the network.

Small nodes help the network in this case by spreading the load, but the load would still be there, and the strain on the node operator’s internet connection would be the same (if he runs more small nodes instead of one big one).

- Nodes encrypt data themselves

- `sn_client` errors if data cannot be encrypted (i.e. no surprise public data!)

- `sn_cli` wraps the `sn_client` and self encryption layers to ensure all data is encrypted in some fashion via encrypted registers (i.e. normal users get all data encrypted via the CLI).

Probably:

- Clients can safely assume and fall back on a key for register and small chunk encryption (i.e. @dirvine’s File Based API above)

Not:

- Any padding needed.
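The layering in this summary could look roughly like the following Rust sketch. A low-level client call refuses data it cannot self-encrypt, and a CLI-level wrapper falls back to encrypting with a client key, so every path ends encrypted. All names here (`client_store`, `cli_store`, `encrypt_with_client_key`) are hypothetical, the 3 KB threshold is the thread’s rough figure, and the actual cryptography is elided.

```rust
/// Assumed self-encryption minimum (the thread's rough "3KB" figure).
const MIN_SELF_ENCRYPT: usize = 3 * 1024;

#[derive(Debug, PartialEq)]
enum Stored {
    SelfEncrypted { len: usize },
    KeyEncrypted { len: usize },
}

#[derive(Debug, PartialEq)]
enum ClientError {
    TooSmallToSelfEncrypt(usize),
}

/// Low-level layer (sn_client-like): errors rather than storing data in the clear.
fn client_store(data: &[u8]) -> Result<Stored, ClientError> {
    if data.len() < MIN_SELF_ENCRYPT {
        return Err(ClientError::TooSmallToSelfEncrypt(data.len()));
    }
    Ok(Stored::SelfEncrypted { len: data.len() })
}

/// Stand-in for encrypting with a key the client can always fall back on
/// (the thread mentions a wallet key as the current default for registers).
fn encrypt_with_client_key(data: &[u8]) -> Stored {
    Stored::KeyEncrypted { len: data.len() }
}

/// CLI-level wrapper (sn_cli-like): every path ends in some form of encryption.
fn cli_store(data: &[u8]) -> Stored {
    match client_store(data) {
        Ok(stored) => stored,
        Err(ClientError::TooSmallToSelfEncrypt(_)) => encrypt_with_client_key(data),
    }
}

fn main() {
    println!("{:?}", cli_store(b"tiny note"));
    println!("{:?}", cli_store(&[0u8; 8192]));
}
```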

My bad, I misread your quoted mention of padding to mean it was in. Padding is out!

I think together my summary plus David’s and yours are consistent now.

Is it though?

Average Joe may run a handful, if that, and will likely be turning those on and off constantly anyway.

Any node operator who goes in with any real effort will either be on cloud or be happy to get a UPS at home.

You think otherwise?

I agree, but so would jeopardy from hosting ‘bad’ data. Which is more important, random ordinary person feeling safe from inadvertently hosting nasty files and protection for the network from reputational damage, or more efficient recovery of nodes from an outage?

EDIT: N.B. removing the ability to restart efficiently (using existing stored records) also shifts some of the balance between ordinary noderunners towards those running high reliability nodes (e.g. data-centres and those with UPS) arguably increasing centralisation (h/t @loziniak).

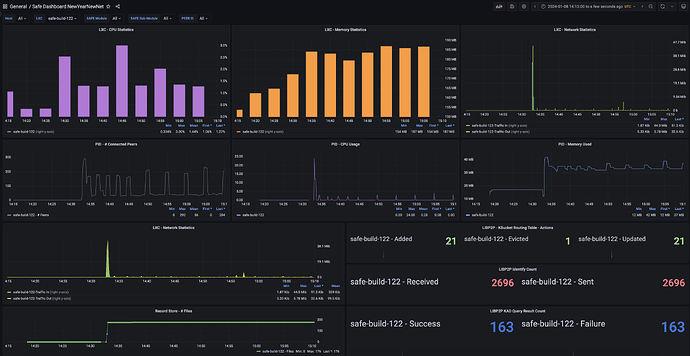

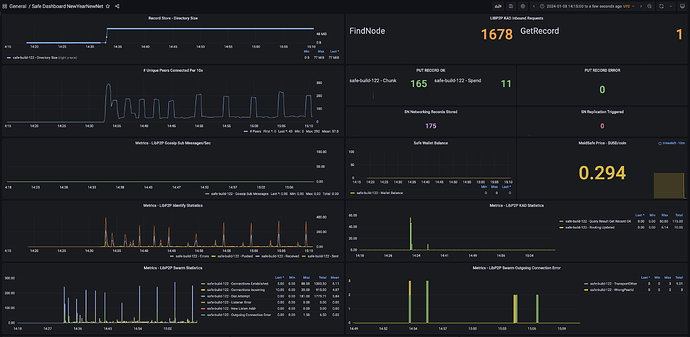

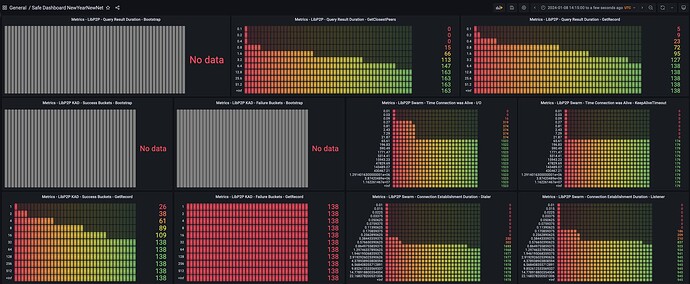

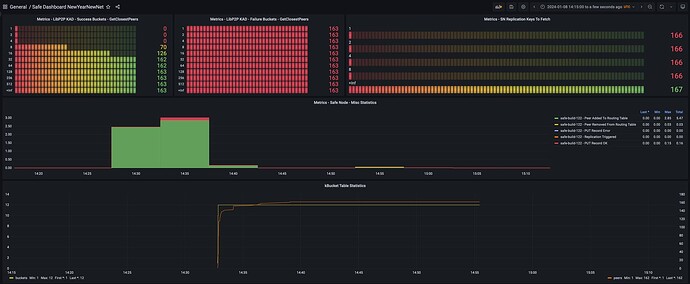

Just started up my node an hour ago or so. I know @storage_guy referred to some oscillations every 20 seconds in network activity on the ZeroTestNet, as noted here: ZeroTestNet [20/12/23 Testnet] [Offline] - #140 by storage_guy. I don’t see the oscillations every 20 seconds, but I do see network activity rising with the number of connected peers every 5 minutes, then falling back to baseline (see below for more details).

Observations:

- CPU & Memory look super healthy, as others have already pointed out.

- Just noting I see the number of connected peers oscillate every 5 minutes or so, with +150 peers being connected then eventually disconnecting. This is at a different frequency than when chunks are being stored, etc. These oscillations every 5 minutes seem to correlate with LIBP2P Identify sent actions activity. I just wanted to confirm whether this is all expected and as per design?

Extra Enhancements:

- Price of Maidsafe coin (eMAID) is now being captured every 10 mins.

Note: I am not yet sure how to convert the nano values in the wallet balance to USD, as I haven’t received any new coins, but will follow up on this as well.
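On the nano-to-USD conversion: the failed send later in this thread shows `safe wallet send 199` being reported as `NanoTokens(199000000000)`, which implies 1 token = 10^9 nanos. Below is a small Rust sketch of the arithmetic. It hypothetically prices one testnet token at one eMAID, and the price used is a placeholder, not real market data.

```rust
/// Inferred from the CLI output in this thread: 199 tokens was shown as
/// NanoTokens(199000000000), i.e. 10^9 nanos per token.
const NANOS_PER_TOKEN: u64 = 1_000_000_000;

/// Convert a nano balance to USD at a given (assumed) USD price per token.
fn nanos_to_usd(nanos: u64, usd_per_token: f64) -> f64 {
    // Split whole tokens from the remainder so the fractional part stays accurate
    // even when the raw nano count is too large to convert to f64 exactly.
    let whole = (nanos / NANOS_PER_TOKEN) as f64;
    let frac = (nanos % NANOS_PER_TOKEN) as f64 / NANOS_PER_TOKEN as f64;
    (whole + frac) * usd_per_token
}

fn main() {
    // Placeholder price, not real eMAID data.
    let usd_per_token = 0.5;
    println!("${:.2}", nanos_to_usd(199_000_000_000, usd_per_token));
}
```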

Wow, testnet token getting some real value, do we have a first real economy test here perhaps?

I am not sure, it somehow feels wrong. I may be overestimating how much additional overhead it would bring to the network.

Average Joe already has an internet connection, and if he has a computer less than 10 years old, he can probably run 100+ nodes right now with $0 investment. A UPS means spending money, which can discourage people from becoming node operators.

My question here is: do we need 100% cryptographic security to feel safe? A bad actor would need a hacked client, would have to pay for the data, and would be unable to target the node where the file will be stored. What is the gain in that?

I am not a lawyer, but wouldn’t a simple check of whether the chunk looks encrypted be enough for plausible deniability?
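A "looks encrypted enough" check would most likely be a byte-entropy heuristic. The minimal Rust sketch below uses a hypothetical 7.5 bits/byte threshold. Well-encrypted data comes out close to the 8.0 maximum, while plain text scores much lower. The check has clear limits. Already-compressed files (ZIP, JPEG and so on) also score high, and, as the second example shows, a trivially patterned input can hit the maximum. So a check like this can flag obviously plain data but cannot prove a chunk is encrypted.

```rust
/// Shannon entropy of a byte slice, in bits per byte (0.0 to 8.0).
fn shannon_entropy(data: &[u8]) -> f64 {
    if data.is_empty() {
        return 0.0;
    }
    let mut counts = [0usize; 256];
    for &b in data {
        counts[b as usize] += 1;
    }
    let len = data.len() as f64;
    counts
        .iter()
        .filter(|&&c| c > 0)
        .map(|&c| {
            let p = c as f64 / len;
            -p * p.log2()
        })
        .sum()
}

/// Hypothetical threshold; a heuristic, not proof of encryption.
fn looks_encrypted(data: &[u8]) -> bool {
    shannon_entropy(data) > 7.5
}

fn main() {
    let text = b"the quick brown fox jumps over the lazy dog ".repeat(100);
    // Not encrypted at all (0, 1, 2, ..., 255 repeated), yet it scores the maximum.
    let pattern: Vec<u8> = (0..=255u8).cycle().take(4096).collect();
    println!("text:    {:.3} bits/byte, looks encrypted: {}", shannon_entropy(&text), looks_encrypted(&text));
    println!("pattern: {:.3} bits/byte, looks encrypted: {}", shannon_entropy(&pattern), looks_encrypted(&pattern));
}
```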

What is the legal concern? Images?

If so, can someone post an image that would not be encrypted/chunked, please?

Personally identifiable information, emails, addresses, phone numbers - all covered by data protection legislation in Europe, UK.

Ahh I can see clearly now. Thanks!

Anyone else struggling to send testnet coins?

Just had this:

ubuntu@s5:~$ safe wallet send 199 868f722a3e1d37338c8442adad96170cbe970b10178917e1e7955130815410e5c778e7605ca4d1ae773c8ec7e0dfcb1f

Logging to directory: "/home/ubuntu/.local/share/safe/client/logs/log_2024-01-08_17-11-33"

Built with git version: ba2bb2b / main / ba2bb2b

Instantiating a SAFE client...

Trying to fetch the bootstrap peers from https://sn-testnet.s3.eu-west-2.amazonaws.com/network-contacts

Connecting to the network with 47 peers:

🔗 Connected to the Network

Failed to send NanoTokens(199000000000) to 868f722a3e1d37338c8442adad96170cbe970b10178917e1e7955130815410e5c778e7605ca4d1ae773c8ec7e0dfcb1f due to Transfers(CouldNotSendMoney("The transfer was not successfully registered in the network: CouldNotSendMoney(\"Network Error Get Record Query Error NotEnoughCopies { record_key: 853625(bd870ce4701c64cbadf999da9db1dfaed2bc85a79b8253eaac60cd576df6e6be), expected: 5, got: 3 }.\")")).

Error:

0: Transfer Error Failed to send tokens due to The transfer was not successfully registered in the network: CouldNotSendMoney("Network Error GetRecord Query Error NotEnoughCopies { record_key: 853625(bd870ce4701c64cbadf999da9db1dfaed2bc85a79b8253eaac60cd576df6e6be), expected: 5, got: 3 }.").

1: Failed to send tokens due to The transfer was not successfully registered in the network: CouldNotSendMoney("Network Error GetRecord Query Error NotEnoughCopies { record_key: 853625(bd870ce4701c64cbadf999da9db1dfaed2bc85a79b8253eaac60cd576df6e6be), expected: 5, got: 3 }.")

Location:

/rustc/82e1608dfa6e0b5569232559e3d385fea5a93112/library/core/src/convert/mod.rs:757

Backtrace omitted. Run with RUST_BACKTRACE=1 environment variable to display it.

Run with RUST_BACKTRACE=full to include source snippets.

This isn’t the risk I’m talking about. If someone can upload data which ends up on random people’s nodes unencrypted that creates difficulties for the people running nodes.

This means having unencrypted dodgy things and possibly illegal data stored on their computers. That both discourages participation and can be used to cause reputational damage to Safe Network (“Hey, people are finding XYZ illegal/dodgy data on their nodes”). Those risks disappear if all the data on the node is encrypted.