Of course you are right. Originally I ran the command to connect to the network first, whereas now I just typed in the command to start the node, to trigger the error and show it to @Chriso.

The important thing is that the error has been discovered and corrected.

Are nodes deleting chunks now?

9am today

+++++++++++++++++++++++++++++++++++++

Total size of all files in .local/share/safe/node/12D3KooWEwMPj64C1MXEKaCYHGgtMApNzzvoynfprLJRLfgfGo7G/record_store/

+++++++++++++++++++++++++++++++++++++

734178794

++++++++++++++++++++++++++++++++++++

Number of files in .local/share/safe/node/12D3KooWEwMPj64C1MXEKaCYHGgtMApNzzvoynfprLJRLfgfGo7G/record_store/

++++++++++++++++++++++++++++++++++++

1864

10.30 am

+++++++++++++++++++++++++++++++++++++

Total size of all files in .local/share/safe/node/12D3KooWEwMPj64C1MXEKaCYHGgtMApNzzvoynfprLJRLfgfGo7G/record_store/

+++++++++++++++++++++++++++++++++++++

60186132

++++++++++++++++++++++++++++++++++++

Number of files in .local/share/safe/node/12D3KooWEwMPj64C1MXEKaCYHGgtMApNzzvoynfprLJRLfgfGo7G/record_store/

++++++++++++++++++++++++++++++++++++

166
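For anyone wanting to take the same two readings, here is a minimal sketch. It assumes GNU `find` (for `-printf`); the function name `record_store_stats` is made up for illustration and is not part of any safenode tooling.

```shell
# Print the number of files and their combined size in bytes for one directory.
# Assumes GNU find, as shipped on most Linux distributions.
record_store_stats() {
    dir=$1
    # Count regular files; tr strips padding that some wc implementations add.
    count=$(find "$dir" -type f | wc -l | tr -d ' ')
    # Sum file sizes; %.0f avoids scientific notation for large totals.
    bytes=$(find "$dir" -type f -printf '%s\n' | awk '{ s += $1 } END { printf "%.0f\n", s }')
    echo "files=$count bytes=$bytes"
}
```

Call it with the path to a node's `record_store` directory, at whatever interval you want to compare.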

They’d prune records once you got to 2048 records, which doesn’t look so wild given you already had 1864 there.

OK - and the pruned records are automatically replicated to other nearby nodes?

Record replication should already have been done passively as any churn happened.

You can check the logs for `A pruning reduced number of record in hold from {num_records} to {new_num_records}`
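A rough way to search for that message, assuming your logs are plain text files somewhere under a directory you know. Where the logs live and what the files are called depends on how you started the node, so the directory is passed in rather than guessed. `pruning_events` is just an illustrative name.

```shell
# Print every pruning log line found under a log directory.
# The directory is an assumption: point it at wherever your node writes logs.
pruning_events() {
    log_dir=$1
    # -r searches recursively, -h omits file names from the output.
    grep -rh "A pruning reduced number of record in hold" "$log_dir"
}
```

Piping the result through `wc -l` gives a count of how many times the node has pruned.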

Records or chunks, where are we here?

Have Chunks officially gone to join the Farmers?

Maybe you’ll find a record is any type of data record. Chunks are specific to file uploads: they are chunks of files. Appendable data, though, is not chunks.

NODE UPDATE:

Overnight, vdash shows a lot of PUTs for my node, but memory keeps fluctuating around 50MB, so I am seeing an improvement over the last test network.

Basically, as @neo says, chunks are made from files and are part of what we store in records.

A record is the kad data structure that is replicated across nodes.

A record can be:

- chunk

- register

- dbc

How is the XOR address determined for a register and other non-chunks?

excellent.

in terms of “node survival”, we’re down to ~1500 which is I think a wee bit better than before. Not the wild improvement I’d been hoping for, but improvements nonetheless.

Kad has its own NetworkAddress struct. The name we feed into that for registers is (at the moment) determined by the client.

There are some security improvements if we remove that capability, but we’d also be losing other functionality, so TBD on what we do there.

My node:

810 Records

299 MB

safenode is using 46MB of RAM

Similar here: a hundred more PUTs and memory is now also 46MB.

16 nodes running from an AWS t3.micro

avg mem per node is 36MB

just killed the two largest nodes cos / was at 99% of 8GB

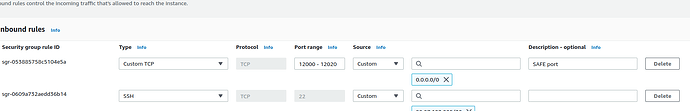

I kept getting told I was behind a NAT (on AWS!!!) until I made a security group rule to allow ports 12000-12020

then started each node like

ubuntu@ip-172-31-6-177:~/.local/share/safe/node$ SN_LOG=all safenode --log-output-dest data-dir --port=12014 &

and just change the port for each node instance.
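If you'd rather not type that out sixteen times, something like the loop below should do it. This is only a sketch: the flags are copied from the command above, while `start_nodes` and the `SAFENODE_BIN` override are names invented here so the loop can be dry-run with `echo` before launching anything.

```shell
# Start one node per port in the range first..last, each in the background.
# SAFENODE_BIN is a hypothetical override for dry runs; it defaults to safenode.
start_nodes() {
    first=$1
    last=$2
    port=$first
    while [ "$port" -le "$last" ]; do
        # Same flags as the manual command, with only the port changing.
        SN_LOG=all "${SAFENODE_BIN:-safenode}" --log-output-dest data-dir --port="$port" &
        port=$((port + 1))
    done
}
```

For example, `start_nodes 12000 12015` would cover sixteen ports inside the 12000-12020 range opened in the security group. Setting `SAFENODE_BIN=echo` first just prints the arguments each node would get.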

how does that 36MB compare to your holdings?

rock64@one:~/.local/share/safe/node/12D3KooWCjQTTLy1no2RxTX6CkJZdZdmUpHqoBgNH5rKthooJdH5$ ls -1 record_store | wc -l

1060

rock64@one:~/.local/share/safe/node/12D3KooWCjQTTLy1no2RxTX6CkJZdZdmUpHqoBgNH5rKthooJdH5$ du -sh record_store

323M record_store

rock64@one:~/.local/share/safe/node/12D3KooWCjQTTLy1no2RxTX6CkJZdZdmUpHqoBgNH5rKthooJdH5$ ps -o rss= -p $(pgrep safenode)

48016

Mine has risen by ~3/4MB with more records, now at 46.91MB

ubuntu@ip-172-31-6-177:~/.local/share/safe/node$ du -sh */record_store

106M 12D3KooWAya3P33jS9QqtE5w7sBHaq5hU9nuuH6SKxXpeAvvYk5q/record_store

31M 12D3KooWCRQgoxsy3zBfWGxCh4SuhWNvrQRRD5s9XfQaeUdcJ8Ug/record_store

707M 12D3KooWD2sAYbfH35U66657AWHQmxtGV44MBV6ey1MPvZ812VyL/record_store

99M 12D3KooWHJGM6YEG8r2GgjxS9pVCabRVzpXEy9kH4wYNAKGqosix/record_store

4.0K 12D3KooWHhcGAuvoqPcEyVpDMAS1JYKbb1Fe6eGvKS6GNzGviQTa/record_store

23M 12D3KooWKG3ywERZFGBzC3MmXorFLdETdawdS8CVSerz9C9oyEfD/record_store

344M 12D3KooWKRCCKDCjMF95cWWDZK7YBSWEF54gsrQHoySUM4QxGpxe/record_store

89M 12D3KooWKiyLPSyrCn7QRtgqohgedZJkQtumJrbWfSAhVcz7zzLU/record_store

83M 12D3KooWKngcYQCCpeinAX5UjHUBRLhdqxz591ZQYBUEqLEvSJQx/record_store

122M 12D3KooWMjc471FMckTTchcne8U4hd2fKqYmmiodQUUfdxYsdx9a/record_store

374M 12D3KooWNF5wTH8KzFfcyTy68LpyBQd8HGNnBrz4WPRayGFeWi59/record_store

101M 12D3KooWPMJGL53akrEUTEyZxGMXXJm5oyzR7gCHr1zUzgCMVNrT/record_store

136M 12D3KooWPTqgrhKHyYUvPfztrFGqLw44sThUmKfKsEbwGi1idU5S/record_store

4.0K 12D3KooWPsWkKy5smBMDUQLnPeZPSbnxyAviwguSMwgdGPuCuzw4/record_store

177M 12D3KooWRTf9x3Ajp4WiHx7UUjGNA7EzCmezdAiuvEKndKCP1Ddp/record_store

27M 12D3KooWRqNyzJLqxi8FrnDccwsUt7FGSj3SJ6Gjj8LLr3Vn4DHP/record_store

325M 12D3KooWSJJpDDPPFs6iMxndy3gcRyjBzbXuWL1qCR5tGpq6qnfj/record_store

288M 12D3KooWSgzk2az6HDoUhBtGoFGR5UubkQG8VBgagGRtEU5YVPcH/record_store

ubuntu@ip-172-31-6-177:~/.local/share/safe/node$ ls -1 */record_store | wc -l

9681

ubuntu@ip-172-31-6-177:~/.local/share/safe/node$ ps -o rss= -p $(pgrep safenode)

29844

31164

39636

29396

25704

33804

39216

30368

32268

34284

28040

27188

38760

30016

24132

ubuntu@ip-172-31-6-177:~/.local/share/safe/node$ df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/root 7941576 6815708 1109484 87% /

tmpfs 490044 0 490044 0% /dev/shm

tmpfs 196020 836 195184 1% /run

tmpfs 5120 0 5120 0% /run/lock

/dev/xvda15 106858 6182 100677 6% /boot/efi

tmpfs 98008 4 98004 1% /run/user/1000
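To turn a column of RSS figures like the one above into an average per node, a small awk filter is enough. `ps` reports RSS in kilobytes, so the sketch below divides by 1024 to get MiB. The name `avg_rss_mib` is only illustrative.

```shell
# Read RSS values in KiB on stdin (one per line, as printed by `ps -o rss=`)
# and print the number of processes and their mean RSS in MiB.
avg_rss_mib() {
    awk 'NF { sum += $1; n++ }
         END { if (n) printf "nodes=%d avg_mib=%.1f\n", n, sum / n / 1024 }'
}
```

Usage would be along the lines of `ps -o rss= -p $(pgrep safenode) | avg_rss_mib`.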

Looks like you have 2 duds up there (4.0K), yet they are using memory.
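A quick way to spot them without reading the `du` listing by eye, assuming a 4.0K `record_store` is simply an empty directory. That assumption and the function name `find_empty_stores` are mine, so treat this as a sketch.

```shell
# List every <node-dir>/record_store under a base directory that holds no files.
find_empty_stores() {
    base=$1
    for store in "$base"/*/record_store; do
        # Skip the unexpanded glob when nothing matches.
        [ -d "$store" ] || continue
        # An empty result from find means the store has no regular files.
        if [ -z "$(find "$store" -type f | head -n 1)" ]; then
            echo "$store"
        fi
    done
}
```

Run from the home directory it would be `find_empty_stores ~/.local/share/safe/node`; the peer ID in each printed path identifies the node.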

This is true,

I will kill them after I've had my tea

Just wanted to preserve that sentence.