Had my tea, killed my nodes, now looking for bother to cause.

Do we still have creep?

My records are up to 1252 and my memory has dropped to 45.7MB.

Seems stable and much improved!
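A single memory reading can't show creep on its own; the trend over time is what matters. Below is a minimal sketch that fits a least-squares slope to memory samples. It assumes you log `(hours since start, MB)` pairs from your own monitoring. The function names and the 0.1 MB/hour cut-off are hypothetical choices for illustration, not part of any node tooling.

```python
def memory_slope_mb_per_hour(samples):
    """Least-squares slope of (hours, MB) samples; positive means memory is growing."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples to estimate a trend")
    mean_t = sum(t for t, _ in samples) / n
    mean_m = sum(m for _, m in samples) / n
    # Covariance of time and memory divided by variance of time.
    num = sum((t - mean_t) * (m - mean_m) for t, m in samples)
    den = sum((t - mean_t) ** 2 for t, _ in samples)
    if den == 0:
        raise ValueError("samples must span more than one point in time")
    return num / den


def looks_like_creep(samples, threshold_mb_per_hour=0.1):
    """Hypothetical cut-off: flag creep only if memory grows faster than the threshold."""
    return memory_slope_mb_per_hour(samples) > threshold_mb_per_hour


# Made-up samples: a steady climb of 2MB per hour.
print(memory_slope_mb_per_hour([(0, 40.0), (1, 42.0), (2, 44.0)]))
```

Sample over a day or more. As the posts in this thread show, bursts of PUTS and GETS can push memory up for a few hours before it settles again, so a short window can mislead.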

Interesting node development…

When I checked my node this morning there was a lot of activity, more than I’ve ever seen: many, many GETS, with bursts of many PUTS.

RAM was fluctuating between 60MB and 80MB.

Checking again just now, a couple of hours later, the PUTS and GETS have stopped and memory has dropped to 63MB. That is still higher than the earlier max of about 50MB I was seeing up until yesterday.

After this burst, total PUTS are 19,000, ten times yesterday’s 1,900, and total GETS are now over 78,000.

Anyone else noticed this burst of activity?


I may have been the cause! I killed all 91 nodes I still had running and started another 100. Also, just after, I then started an upload of 1000x1KB files.

The TAIB (Testnet Accident Investigation Board) has completed its preliminary investigations. The interim report will be available shortly.

We remind you that the purpose of these investigations is not to apportion blame but to establish a root cause and issue recommendations to prevent a repeat of similar incidents.

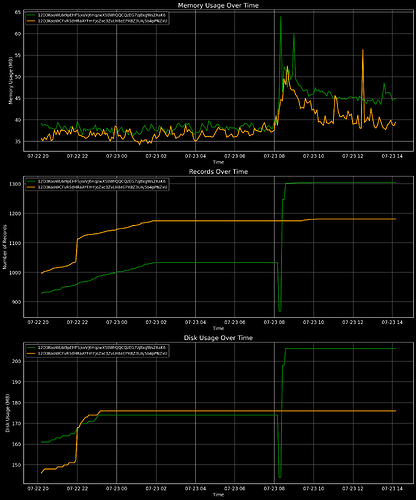

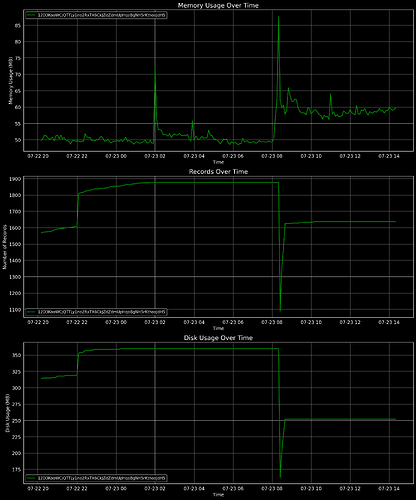

Yes, the first set of graphs is 2 nodes on the same SBC; the second is a single node on a second SBC of the same model.

Is the drop in records and disk usage churn related?

From the 1000x1KB files I uploaded earlier (finishing at 1009 UTC), I had 3 failures of this type:

Did not store all chunks of file '1KB_998' to all nodes in the close group: Network Error Record not put to network properly.

I’m trying again with the same set of files, so I’m guessing that will not result in lots of new chunks being stored, because the files will be the same. I want to end up with a set of files, including a file containing their md5sums, that I can download and check for errors.
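The checksum-file idea can be sketched with Python's standard `hashlib`. This is a minimal sketch, assuming the test files sit in one local directory. The manifest name `md5sums.txt` and the helper names are hypothetical. Each manifest line uses the `<hash>  <filename>` layout (two spaces) that GNU `md5sum -c` also reads, so either tool can check the downloaded copy.

```python
import hashlib
from pathlib import Path


def md5_of(path):
    """MD5 hex digest of one file, read in blocks so large files are fine too."""
    h = hashlib.md5()
    with Path(path).open("rb") as f:
        for block in iter(lambda: f.read(65536), b""):
            h.update(block)
    return h.hexdigest()


def write_manifest(directory, manifest_name="md5sums.txt"):
    """Write '<hash>  <name>' lines for every file in the directory (except the manifest)."""
    directory = Path(directory)
    lines = [
        f"{md5_of(p)}  {p.name}"
        for p in sorted(directory.iterdir())
        if p.is_file() and p.name != manifest_name
    ]
    manifest = directory / manifest_name
    manifest.write_text("\n".join(lines) + "\n")
    return manifest


def verify_manifest(manifest):
    """Return the names of files that are missing or whose hash no longer matches."""
    manifest = Path(manifest)
    bad = []
    for line in manifest.read_text().splitlines():
        if not line.strip():
            continue
        expected, name = line.split("  ", 1)
        path = manifest.parent / name
        if not path.is_file() or md5_of(path) != expected:
            bad.append(name)
    return bad
```

Call `write_manifest` on the folder before uploading it. After downloading everything (manifest included) into a fresh folder, call `verify_manifest` on the downloaded `md5sums.txt`. An empty list means every file came back intact.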

My node continues to look healthy, with more PUTS & GETS, but RAM is now slowly declining: it is down to 53MB, closing in on the earlier max of 50MB.


This is all great stuff to see. Thanks everyone for reporting, creating and destroying! Great to see how things are holding up here across different node operators!

My node is still healthy but vdash was killed, so I better fix its memory leak now nodes are stable.


Can an elder please tell this bambino why 1 of my nodes would have 0 cancelled_write_bytes/read_bytes, hugely different to the other 2? (Coincidentally, it also has the lowest memory use and the highest number of records.)

------------------------------------------

Timestamp: Mon Jul 24 13:32:12 UTC 2023

Node: 12D3KooWL6i9pEHFSjkoVJ6HqzwX5BWrQQCQzEG7zj8xgWs2XaK6

PID: 26456

Memory used: 46.4844MB

CPU usage: 5.0%

File descriptors: 355

IO operations:

rchar: 6362686983

wchar: 1295516290

syscr: 2164342

syscw: 3315516

read_bytes: 36864

write_bytes: 1325719552

cancelled_write_bytes: 62287872

Records: 1480

Disk usage: 208MB

------------------------------------------

Timestamp: Mon Jul 24 13:32:13 UTC 2023

Node: 12D3KooWCFvR5dHRaAYFmYJoZxc3ZvLH8eEPX8Z3UAj5b4pPNZxU

PID: 26563

Memory used: 41.7734MB

CPU usage: 3.8%

File descriptors: 346

IO operations:

rchar: 9687955372

wchar: 799806599

syscr: 2173154

syscw: 2386931

read_bytes: 0

write_bytes: 829480960

cancelled_write_bytes: 0

Records: 1889

Disk usage: 245MB

------------------------------------------

Timestamp: Mon Jul 24 13:32:35 UTC 2023

Node: 12D3KooWCjQTTLy1no2RxTX6CkJZdZdmUpHqoBgNH5rKthooJdH5

PID: 1458

Memory used: 59.6055MB

CPU usage: 4.6%

File descriptors: 323

IO operations:

rchar: 24796973501

wchar: 3776179918

syscr: 4133185

syscw: 5831273

read_bytes: 22142976

write_bytes: 3858259968

cancelled_write_bytes: 189480960

Records: 1795

Disk usage: 254MB

Interesting, I’m not sure off the top of my head.

It is definitely going through the motions: the orange one has been pruned since, but still shows no reads. All three have increased write_bytes, but only 1 of 3 has increased read_bytes since yesterday, so it seems folk are mostly putting.
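The IO fields in these snapshots have the same names as the counters Linux exposes in `/proc/<pid>/io`, so the stats script is presumably reading that file (an assumption; the script itself isn't shown). Per the proc(5) man page, `rchar` counts every byte passed through read calls, sockets included, while `read_bytes` counts only bytes actually fetched from the storage layer. Reads served from the page cache therefore never reach `read_bytes`, which may go some way toward explaining a node with a large `rchar` and a `read_bytes` of 0. Below is a minimal sketch for diffing two snapshots. The helper names are hypothetical.

```python
def parse_counters(text):
    """Parse 'name: value' lines (the layout of /proc/<pid>/io) into ints.

    Lines whose value is not a plain integer (Timestamp, 'Memory used: 46.4844MB', ...)
    are skipped, so pasted stats blocks like the ones in this thread also work.
    """
    counters = {}
    for line in text.splitlines():
        name, sep, value = line.partition(":")
        if sep and value.strip().isdigit():
            counters[name.strip()] = int(value.strip())
    return counters


def read_proc_io(pid):
    """Linux only; reading another user's process needs suitable permissions."""
    with open(f"/proc/{pid}/io") as f:
        return parse_counters(f.read())


def counter_deltas(before, after):
    """Change in every counter present in both snapshots."""
    return {name: after[name] - before[name] for name in before if name in after}
```

Feeding it the third node's figures from the two days gives a `read_bytes` delta of 27,082,752 and an unchanged `cancelled_write_bytes`. The same comparison shows `read_bytes` flat for the other two nodes.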

Timestamp: Tue Jul 25 07:47:33 EDT 2023

Node: 12D3KooWL6i9pEHFSjkoVJ6HqzwX5BWrQQCQzEG7zj8xgWs2XaK6

PID: 26456

Memory used: 50.0156MB

CPU usage: 5.1%

File descriptors: 296

IO operations:

rchar: 7338145838

wchar: 1583994554

syscr: 2920002

syscw: 4281796

read_bytes: 36864

write_bytes: 1623707648

cancelled_write_bytes: 62287872

Threads: 7

Records: 1755

Disk usage: 251MB

------------------------------------------

Timestamp: Tue Jul 25 07:47:33 EDT 2023

Node: 12D3KooWCFvR5dHRaAYFmYJoZxc3ZvLH8eEPX8Z3UAj5b4pPNZxU

PID: 26563

Memory used: 48.0664MB

CPU usage: 3.7%

File descriptors: 504

IO operations:

rchar: 10329365732

wchar: 1045417304

syscr: 2920285

syscw: 3139801

read_bytes: 0

write_bytes: 1085640704

cancelled_write_bytes: 0

Threads: 7

Records: 936

Disk usage: 110MB

------------------------------------------

Timestamp: Tue Jul 25 07:48:36 EDT 2023

Node: 12D3KooWCjQTTLy1no2RxTX6CkJZdZdmUpHqoBgNH5rKthooJdH5

PID: 1458

Memory used: 69.5039MB

CPU usage: 4.6%

File descriptors: 333

IO operations:

rchar: 25963747921

wchar: 4045157155

syscr: 4886844

syscw: 6692552

read_bytes: 49225728

write_bytes: 4138852352

cancelled_write_bytes: 189480960

Threads: 7

Records: 1303

Disk usage: 176MB

The real question of the meaning of life is: when moon?

To predict moon, pinpoint lambo and subtract an hour.

Where we are going, when Mars and beyond? Moon is not enough.

I feel like this one has run its course, and I’ll be bringing it down shortly.

Thanks everyone for their participation and analysis!
