Ah, but I eventually want to feed it lists of someone else's uploads.

We need crystal clear instructions in the OP to wipe {SAFEHOME}/client and {SAFEHOME}/node but preserve {SAFEHOME}/tools.
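Until the OP spells it out, here is a minimal Python sketch of that wipe. It assumes {SAFEHOME} is a directory containing `client`, `node` and `tools` subdirectories, as described in this thread; the function name `wipe_safe_home` is made up for illustration and is not part of any safe tooling.

```python
import shutil
from pathlib import Path


def wipe_safe_home(safe_home: Path, wipe=("client", "node")) -> list:
    """Delete the named subdirectories of safe_home and leave everything else alone.

    Anything not listed in `wipe` (for example `tools`) is never touched.
    Returns the names of the subdirectories that were actually removed.
    """
    removed = []
    for name in wipe:
        target = safe_home / name
        if target.is_dir():
            # Recursively delete the directory and all of its contents.
            shutil.rmtree(target)
            removed.append(name)
    return removed
```

On the Windows machine quoted in this thread, the call would look something like `wipe_safe_home(Path(r"C:\Users\gggg\AppData\Roaming\safe"))`. Adjust the path for your own OS and user.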

When I downloaded the list from DeusNexus, I simply replaced the contents of the uploaded_files file. I am lazy and it works.

Did the same

I understand, but what do I do to run the client and get SNT?

How do I delete all these files?

Try rmdir C:\Users\gggg\AppData\Roaming\safe -r -Force

Unfortunately, no response to this command.

I don’t think it gives a response - your files will be deleted though

Try dir /s C:\Users\gggg\AppData\Roaming\safe

It should report zero files.

It's been years since I used Windows though…
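If you would rather not depend on shell-specific syntax for that check, a small Python sketch can count what is left. The helper name `count_files` is hypothetical; it counts regular files under a directory and treats a missing directory as empty.

```python
from pathlib import Path


def count_files(root: Path) -> int:
    """Return the number of regular files under root, or 0 if root does not exist."""
    if not root.exists():
        return 0
    # rglob("*") walks the whole tree; directories themselves are not counted.
    return sum(1 for p in root.rglob("*") if p.is_file())
```

After a successful wipe, `count_files(Path(r"C:\Users\gggg\AppData\Roaming\safe"))` should come back as 0.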

It worked, now I can download the tokens.

Thank you for your help, guys.

error: unrecognized subcommand 'file'

error: ‘s’ suffix missing in command

Good spot. I’ll amend it.

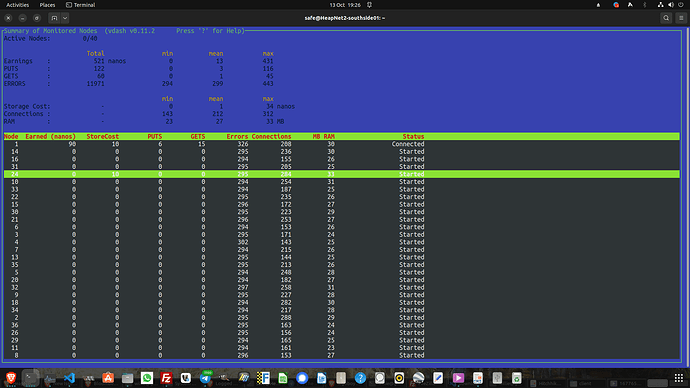

It’s doing all your chunks at once? The default is 20, I think, so that’s buggy.

Can you send me your logs for any run that is doing this, please?

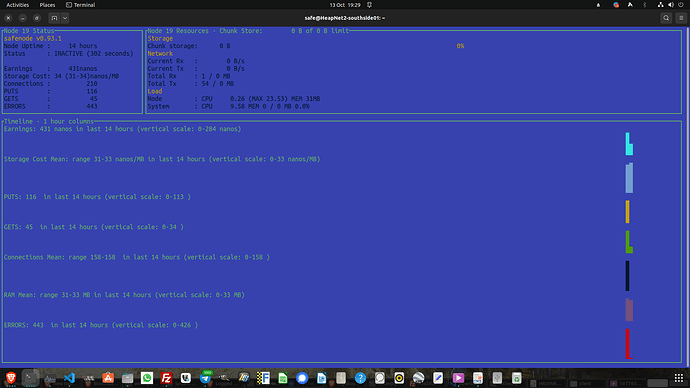

This may well be the “lost node” situation we talk about in the OP, for which we removed some code that seems to have been leaking memory.

Seems like there may be other workarounds for this that we can test out to avoid needing to re-add it.

Do we need to call the dir maidsafe instead of safe? I don’t understand why anyone is putting tooling in a directory we frequently recommend wiping.

Honestly, it is very helpful for us to have one concrete place to put all files. And one concrete place to erase. If we’re having to caveat that, something may fall through the cracks?

Are we saying that users want a safe dir in that location on all machines? Or just that there is a safe dir somewhere standard, which is handy? (What I mean is that the current safe folder is, I believe, normally in a vendor-specific location… so perhaps our naming makes it unclear that it’s not really for human consumption?)

i.e. would ~/safe not be a better location to place tooling? It’s also away from the maidsafe tools.

(Probably worth a real discussion of where tooling / safe code etc. should live. I don’t really want to distract from the topic, but folk keep asking us to clarify this in the OP. For me… the whole point is that we have one folder to erase to start fresh, not a list to keep up to date…)

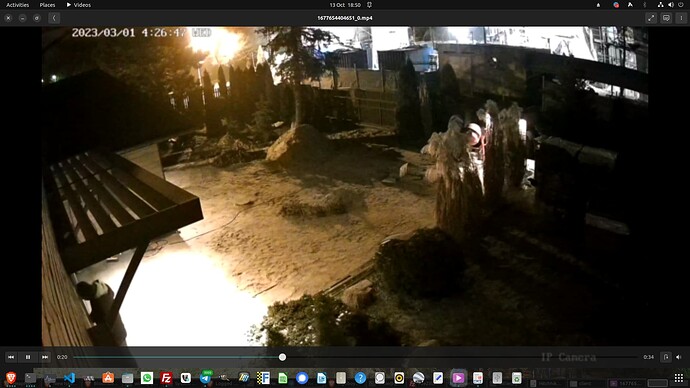

I uploaded a 10.2 MB MP4 file to the network in 22 s, which was split into 22 parts:

PS C:\Users\gggg> safe files upload E:\gggg\Videos\1677654404651_0.mp4

Logging to directory: "C:\\Users\\gggg\\AppData\\Roaming\\safe\\client\\logs\\log_2023-10-13_18-06-41"

Built with git version: 9888b2c / main / 9888b2c

Instantiating a SAFE client...

Trying to fetch the bootstrap peers from https://sn-testnet.s3.eu-west-2.amazonaws.com/network-contacts

Connecting to the network w/peers: ["/ip4/157.245.35.30/tcp/33271/p2p/12D3KooWBr3v6fm2EMjRmGEJDV7kYnFiZs1Lh1tpKM2frGMnH15P",

(...) "/ip4/188.166.149.100/tcp/45039/p2p/12D3KooWB4LrRjPRWrYq7sN3cHCVfLa6pWXYNcrVKjd1eTtUHSaT"]...

🔗 Connected to the Network

Chunking 1 files...

Input was split into 22 chunks

Will now attempt to upload them...

Uploaded 22 chunks in 45 seconds

**************************************

* Payment Details *

**************************************

Made payment of 0.000065627 for 22 chunks

New wallet balance: 99.999934373

**************************************

* Verification *

**************************************

22 chunks to be checked and repaid if required

Verified 22 chunks in 5.8284301s

Verification complete: all chunks paid and stored

**************************************

* Uploaded Files *

**************************************

Uploaded 1677654404651_0.mp4 to 9b69ce01fdd7c47848249f5effce737a19f58414a9abad8ea26b93e2f9c4e8c9

The download took 7 s, but only 21 parts were downloaded from the network. I have reported this in previous tests. Is this not a bug?

PS C:\Users\gggg> safe files download

Logging to directory: "C:\\Users\\gggg\\AppData\\Roaming\\safe\\client\\logs\\log_2023-10-13_18-50-33"

Built with git version: 9888b2c / main / 9888b2c

Instantiating a SAFE client...

Trying to fetch the bootstrap peers from https://sn-testnet.s3.eu-west-2.amazonaws.com/network-contacts

Connecting to the network w/peers: ["/ip4/157.245.35.30/tcp/33271/p2p/12D3KooWBr3v6fm2EMjRmGEJDV7kYnFiZs1Lh1tpKM2frGMnH15P",

(...)

"/ip4/188.166.149.100/tcp/45039/p2p/12D3KooWB4LrRjPRWrYq7sN3cHCVfLa6pWXYNcrVKjd1eTtUHSaT"]...

🔗 Connected to the Network

Attempting to download all files uploaded by the current user...

Downloading 1677654404651_0.mp4 from 9b69ce01fdd7c47848249f5effce737a19f58414a9abad8ea26b93e2f9c4e8c9

Client (read all) download progress 1/21

Client (read all) download progress 2/21

Client (read all) download progress 3/21

Client (read all) download progress 4/21

Client (read all) download progress 5/21

Client (read all) download progress 6/21

Client (read all) download progress 7/21

Client (read all) download progress 8/21

Client (read all) download progress 9/21

Client (read all) download progress 10/21

Client (read all) download progress 11/21

Client (read all) download progress 12/21

Client (read all) download progress 13/21

Client (read all) download progress 14/21

Client (read all) download progress 15/21

Client (read all) download progress 16/21

Client (read all) download progress 17/21

Client (read all) download progress 18/21

Client (read all) download progress 19/21

Client (read all) download progress 20/21

Client (read all) download progress 21/21

Client downloaded file in 7.5334988s

Saved 1677654404651_0.mp4 at C:\Users\gggg\AppData\Roaming\safe\client\downloaded_files\1677654404651_0.mp4

However, if I want to save the file to a specific directory after downloading, I get an error. Am I specifying the directory/name wrong?

PS C:\Users\gggg> safe files download [E:\gggg\Videos\1677654404651_0.mp4]

Logging to directory: "C:\\Users\\gggg\\AppData\\Roaming\\safe\\client\\logs\\log_2023-10-13_18-13-53"

Built with git version: 9888b2c / main / 9888b2c

Instantiating a SAFE client...

Trying to fetch the bootstrap peers from https://sn-testnet.s3.eu-west-2.amazonaws.com/network-contacts

Connecting to the network w/peers: ["/ip4/157.245.35.30/tcp/33271/p2p/12D3KooWBr3v6fm2EMjRmGEJDV7kYnFiZs1Lh1tpKM2frGMnH15P",

(...)

"/ip4/188.166.149.100/tcp/45039/p2p/12D3KooWB4LrRjPRWrYq7sN3cHCVfLa6pWXYNcrVKjd1eTtUHSaT"]...

🔗 Connected to the Network

Error:

0: Both the name and address must be supplied if either are used

Location:

sn_cli\src\subcommands\files.rs:105

Suggestion: Please run the command again in the form 'files upload <name> <address>'

Backtrace omitted. Run with RUST_BACKTRACE=1 environment variable to display it.

Run with RUST_BACKTRACE=full to include source snippets.

I have tried many times, without brackets etc. How should I specify the directory and location?

I totally understand that – {SAFEHOME}/tools came about as a result of late-night discussions with @chriso.

I am not welded to the idea of {SAFEHOME}/tools but I am welded to the idea of a commonly accepted destination for /tools.

@happybeing’s vdash is an exception, of course, as it is written in Rust and installs itself in .cargo/, as will other tools written in Rust as they emerge. It’s not too late to ditch {SAFEHOME}/tools, but we need an agreed alternative destination for non-Rust tooling.

KISS

Just let the downloads be saved to {SAFEHOME}/client and then move them to the required destination later. Precise download destinations will come along soon, but for now this is easier, IMHO.
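For anyone who wants to script the "move them later" step, here is a rough Python sketch. It assumes downloads land in a `downloaded_files` folder under {SAFEHOME}/client, as in the log above; the function name `move_downloads` is hypothetical, and files that already exist at the destination are skipped rather than overwritten.

```python
import shutil
from pathlib import Path


def move_downloads(downloads_dir: Path, destination: Path) -> list:
    """Move every regular file in downloads_dir into destination.

    Returns the list of new paths. Files whose name already exists in
    destination are left where they are instead of being overwritten.
    """
    destination.mkdir(parents=True, exist_ok=True)
    moved = []
    for item in sorted(downloads_dir.iterdir()):
        if not item.is_file():
            continue
        target = destination / item.name
        if target.exists():
            # Skip rather than silently clobber an existing file.
            continue
        shutil.move(str(item), str(target))
        moved.append(target)
    return moved
```

With the paths from this thread, that would be roughly `move_downloads(Path(r"C:\Users\gggg\AppData\Roaming\safe\client\downloaded_files"), Path(r"E:\gggg\Videos"))`.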

I’ve added a note and the logs in my post with the updated info on the upload and how it ended. Thanks Josh!