Agreed. As things stand, the idea many had of supplying terabytes of disk space seems unlikely.

I think that is well aligned with the goals and values of the network: favouring many smaller, cheaper devices over fewer, larger, more expensive ones. It also fits better with using spare resources in existing equipment rather than purpose-built, state-of-the-art setups.

I’m certainly happier that the current design seems to be back to the vision that even mobile phones charging overnight could take part. Whether or not that turns out to be viable, it does seem possible again.

Agreed, it just needs to be pointed out, as I am not sure everyone has realized yet.

Keen to find some calculation of what a particular setup could run. The big obstacle that rears its ugly head again is the good old router.

Router, an obstacle between end user and internet.

Are you looking for this?

Yes, that’s the post.

I am no network engineer, but a 100€ router claiming 900k+ connections seems fishy. Isn’t that the realm of enterprise-level routers?

It would be great if the claim is true, but I will stay on the side of skepticism for now.

It is this one: the MikroTik hAP ax².

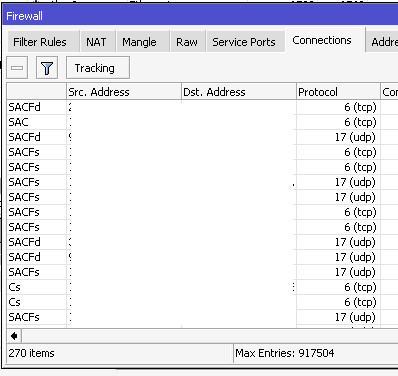

I haven’t tested its limits. The maximum I had was around 65k connections, and it was handling that like nothing, at around 10% CPU if I remember correctly.

Really interested in how hard you can push it without issue.

I have been eyeing this MikroTik myself, but I will need some solid proof that such a price tag is required.

I have worked with them, and the CCR2004 has good performance for the price. It is “cheap” HW compared to Cisco, Juniper,… They definitely cut corners in some places, but it is “good enough” for many people.

What I find worst is the SW. As soon as you want to use more complicated setups, you will hit bugs. It is not a problem specific to the CCR; MikroTik SW in general sometimes works in mysterious ways.

The whole discussion started with this post:

MikroTik are quite respectable. I’ve used them at work. I have my eye on a MikroTik RB5009UG+S+IN if I upgrade my home internet to a 1Gb full fibre line.

My current router is a Turris Omnia, but not the Wi-Fi 6 one, just the older Wi-Fi model with only three aerials on the back.

It claims active connections can go up to 65,536. I had 20,000+ active connections when I tried to run 100 nodes on this testnet, but by then my ADSL connection was floored.

The bandwidth on the ADSL is 80Mb/s down / 20Mb/s up, and it does get that. But the traffic through the port serving the machine with the safenodes wasn’t anywhere near those limits: it was a steady 10Mb/s down and 5Mb/s up, so I think it was the number of connections that buckled it. Netflix lost the plot and websites were struggling to load. Bear in mind that Netflix is connected through the other port on the Draytek ADSL modem.

So it wasn’t the Turris router that was struggling with the number of connections; it was either the Draytek or the BT equipment at their end doing something.

I did try the BT router they supplied, but that gave up at a much lower number of connections: about 10,000.
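As a rough sanity check on the claim that bandwidth wasn’t the bottleneck, here is a back-of-the-envelope sketch using only the figures quoted above. It assumes the steady 10Mb/s down and 5Mb/s up were measured during the same 100-node run and were shared evenly between the nodes; both are assumptions, not measurements.

```python
# Figures quoted in the post (Mb/s). Assumption: the observed traffic was
# measured during the same 100-node run and split evenly across nodes.
LINE_DOWN, LINE_UP = 80.0, 20.0   # what the ADSL line delivers
USED_DOWN, USED_UP = 10.0, 5.0    # what the safenode machine was using
NODES = 100


def utilisation(used: float, capacity: float) -> float:
    """Fraction of the line's capacity actually in use."""
    return used / capacity


down_util = utilisation(USED_DOWN, LINE_DOWN)
up_util = utilisation(USED_UP, LINE_UP)
per_node_down = USED_DOWN / NODES
per_node_up = USED_UP / NODES

print(f"line utilisation: {down_util:.1%} down, {up_util:.1%} up")
print(f"per node: {per_node_down:.2f} Mb/s down, {per_node_up:.2f} Mb/s up")
```

With the line only an eighth full downstream and a quarter full upstream, something other than raw bandwidth, such as the number of tracked connections, is a plausible culprit, which matches the conclusion in the post.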

So this is my point: before most people’s bandwidth is stretched, and definitely before they run out of RAM, CPU or disk, they will hit a limit somewhere on the number of connections, and therefore on the number of nodes they can run. IT cognoscenti such as ourselves can throw as much money, time and expertise at this as we want, but most peeps can’t or won’t.

I’m going to bet that the number of connections most people can have running from home on basic routers is about 5,000. On current showing, that is about 20 nodes’ worth, which is fine if that is what it is. But it would be a shame if the storage that can be allocated to a node is fairly low, say 10GB, so that all they can contribute is 200GB. That’s not a lot these days. I think most people who would be willing to run some nodes would be able to rustle up a bit more storage than that! Something you can’t realistically connect a few TB to would look a bit old-fashioned.
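The estimate above can be written out as a small calculation. This is a sketch, not a model of how safenodes actually use connections: the 250 connections per node is simply the ratio implied by “5,000 connections ≈ 20 nodes” (the testnet run gave 20,000+ connections for 100 nodes, i.e. at least 200 per node), and 10GB per node is the hypothetical figure from the post. The 65,536 limit is the Turris’s claimed figure, and 10,000 is roughly where the BT router gave up.

```python
# Assumptions (from the post, not from any spec): ~250 connections per node,
# 10 GB of storage per node. Router limits are the figures quoted in the thread.
CONNS_PER_NODE = 250
GB_PER_NODE = 10


def nodes_supported(connection_limit: int, conns_per_node: int = CONNS_PER_NODE) -> int:
    """Whole nodes that fit under a router's connection limit."""
    return connection_limit // conns_per_node


def storage_gb(nodes: int, gb_per_node: int = GB_PER_NODE) -> int:
    """Total storage those nodes could offer."""
    return nodes * gb_per_node


router_limits = {
    "basic home router (guess)": 5_000,
    "BT router (gave up at)": 10_000,
    "Turris Omnia (claimed)": 65_536,
}

for name, limit in router_limits.items():
    n = nodes_supported(limit)
    print(f"{name}: ~{n} nodes, ~{storage_gb(n)} GB")
```

At a fixed connection limit the node count is capped, so the total scales only with the per-node storage allowance, which is why a low allowance per node would keep contributions small.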

(As a side note, if this whole thing takes off I wouldn’t be surprised if most ISPs looked askance at people consistently keeping open hundreds of times the number of connections a household would normally have.)

Edit: I forgot to mention that, before Netflix broke, a lot of the safenodes broke anyway: they were deciding they were behind a NAT, so they were clearly having trouble connecting to other nodes.
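One plausible mechanism for both symptoms (safenodes deciding they were behind NAT, and household traffic such as Netflix failing) is a full connection-tracking table somewhere in the path, which the post suspects was the Draytek or the BT equipment. The toy model below is hypothetical: the `ConnTable` class, the 20,000-entry capacity and the addresses are illustrative only, and real devices also expire idle entries on timeouts rather than refusing new flows indefinitely.

```python
# Toy model of a NAT/connection-tracking table with a fixed number of slots.
# Hypothetical: capacity, addresses and ports are illustrative, and real routers
# also expire idle entries via timeouts, which this sketch ignores.
Flow = tuple[str, int, str, int]  # (local ip, local port, remote host, remote port)


class ConnTable:
    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.flows: set[Flow] = set()
        self.dropped = 0

    def open(self, flow: Flow) -> bool:
        """Track a new flow; refuse it if the table is already full."""
        if flow in self.flows:
            return True
        if len(self.flows) >= self.capacity:
            self.dropped += 1
            return False
        self.flows.add(flow)
        return True

    def close(self, flow: Flow) -> None:
        self.flows.discard(flow)


table = ConnTable(capacity=20_000)

# 100 nodes, each trying to reach 250 peers.
for node in range(100):
    for peer in range(250):
        table.open(("192.168.1.10", 12000 + node, f"peer-{node}-{peer}", 12000))

# A household device now tries to start a streaming session.
netflix_ok = table.open(("192.168.1.20", 50000, "netflix", 443))

print(len(table.flows), table.dropped, netflix_ok)  # prints: 20000 5001 False
```

In this model the nodes that arrive after the table fills cannot open any connections at all, which from their side looks much like being unreachable, and unrelated devices on the same network are refused too.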

You are right, a lot of people will hit the limits of cheap old HW. But new, more demanding SW has made people upgrade their computers ever since computers began to exist. Otherwise we would still be using 5.25" floppy disks and 64 kbps modems.

The Netflix you mentioned is a good example: a lot of people had to upgrade their internet connection because they wanted Netflix at maximum quality. Some ISPs tried to block or limit Netflix “because it uses unfair amounts of data”, but that didn’t become mainstream practice; the majority upgraded their backbone HW and upstream links.

Anyway, this debate about the number of connections is nice, but I hope it is only a temporary issue. Once safenetwork switches to UDP, and I hope it will, the workload for routers will be different.
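How much UDP would change things depends on how the router maps flows. RFC 4787 describes NAT mapping behaviours for UDP; the simplified sketch below counts how many mappings a single node’s single UDP socket would need when talking to 200 peers under each behaviour. The addresses are illustrative, and the model is deliberately narrow: many routers still keep per-flow connection-tracking state for UDP even when the external mapping is shared, so fewer mappings does not automatically mean less work.

```python
# Simplified model of RFC 4787 UDP mapping behaviours: which parts of the
# remote endpoint a NAT uses to decide whether a new mapping is needed.
Endpoint = tuple[str, int]  # (ip, port)


def count_mappings(internal: Endpoint, remotes: list[Endpoint], behaviour: str) -> int:
    keys: set[tuple] = set()
    for remote_ip, remote_port in remotes:
        if behaviour == "endpoint-independent":
            keys.add(internal)                            # one mapping per internal socket
        elif behaviour == "address-dependent":
            keys.add((internal, remote_ip))               # one per remote IP
        elif behaviour == "address-and-port-dependent":
            keys.add((internal, remote_ip, remote_port))  # one per remote ip:port
        else:
            raise ValueError(f"unknown behaviour: {behaviour}")
    return len(keys)


node_socket = ("192.168.1.10", 12000)
# Illustrative peers: 100 remote hosts, two ports each (200 peer endpoints).
peers = [(f"203.0.113.{i % 100 + 1}", 12000 + i // 100) for i in range(200)]

for b in ("endpoint-independent", "address-dependent", "address-and-port-dependent"):
    print(b, count_mappings(node_socket, peers, b))
```

Under endpoint-independent mapping the node needs one external mapping however many peers it talks to, whereas under address-and-port-dependent mapping it needs one per peer endpoint, much as separate TCP connections would.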

MikroTik have positioned themselves as an alternative to the established commercial vendors and are entering the enterprise marketplace as an alternative to Cisco and others.

I have used them before, and their features rival those of components costing 2 to 10 times as much (depending on the MikroTik component).

The number of connections has more to do with the amount of memory and the actual processor. Considering these processors are very cheap in quantity, the choice of processor has more to do with penny-pinching (profits) than with the real cost of the final product.

EDIT: also, chip manufacturers like Microchip produce chips that provide the switching fabric for router/switch units, which frees up the CPU to do the actual routing.
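To illustrate the memory side of that point: if each tracked connection costs a roughly fixed number of bytes, the maximum table size grows linearly with the RAM set aside for it. The 320 bytes per entry and the memory budgets below are hypothetical round numbers, not figures for any MikroTik or other device, and the sketch ignores the CPU cost of looking entries up on every packet.

```python
# Hypothetical numbers: real per-entry size varies by OS, kernel version
# and enabled features, and the RAM reserved for the table is device-specific.
BYTES_PER_ENTRY = 320
MIB = 1024 * 1024


def max_tracked_connections(memory_bytes: int, bytes_per_entry: int = BYTES_PER_ENTRY) -> int:
    """Upper bound on table entries that fit in a given memory budget."""
    return memory_bytes // bytes_per_entry


for budget_mib in (16, 64, 256):
    n = max_tracked_connections(budget_mib * MIB)
    print(f"{budget_mib} MiB -> ~{n:,} connections")
```

Even with made-up figures, going from tens of thousands to hundreds of thousands of entries is purely a matter of memory; whether the CPU can actually push traffic for that many flows is a separate question.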

Just want to point out that I have edited my post higher up.

Sorry, I was not in front of the numbers and guesstimated a bit too high.

The edited number is calculated from the nodes I ran in the last test.

And it led us to games that take tens of gigabytes, half-gigabyte text editors and websites weighing tens of megabytes.

HW is cheap; good programmers are not. I definitely don’t like it, but seeking perfection doesn’t usually go hand in hand with making money; most people and companies seek the easiest way to do things.