Interesting!

Is everybody joining causing lots of churn?

I already terminated the nodes on my home systems that I had just started with the last release, and with the script I am using, the logs are gone.

But I noticed they were getting GETs and PUTs but no rewards, so I started uploading a Linux ISO to see if I would get some rewards, and it started showing the re-uploading error message.

A possible test: everyone running nodes from home with the last release kills their nodes, and we see whether uploads resume and things recover?

This will be the case until the Register address is stored and reused, which may be a way off yet, and may have to wait until the personal Safe/Account Packet is in use, because that will store things like that.

Gabriel is working on the next PR now, which will use a Register pointing to a chunk per file to hold metadata and a pointer to the datamap, so that will come first and, I think, won't change functionality much.

The upload I had nearly finished is now completely stuck in the mud with 11 chunks to go:

```
ubuntu@safe-hamilton:~/safe/images$ safe files upload -p linuxmint-21.2-mate-64bit.iso
Logging to directory: "/home/ubuntu/.local/share/safe/client/logs/log_2024-02-15_13-01-38"
Built with git version: 358f475 / main / 358f475
Instantiating a SAFE client...
Trying to fetch the bootstrap peers from https://sn-testnet.s3.eu-west-2.amazonaws.com/network-contacts
Connecting to the network with 48 peers
🔗 Connected to the Network
"linuxmint-21.2-mate-64bit.iso" will be made public and linkable
Starting to chunk "linuxmint-21.2-mate-64bit.iso" now.
Uploading 111 chunks
⠠ [00:04:23] [----------------------------------------] 0/111
⠂ [00:04:25] [----------------------------------------] 0/111 ^C
ubuntu@safe-hamilton:~/safe/images$
```

I started 100 nodes 15 minutes ago.

I've had a feeling for some time that whenever I start a boatload of nodes at the same time, it's usually the beginning of the end for the testnet.

Got to check out now, I've got a flight to catch. Looking forward to seeing how this pans out.

Me too, attempting takeoff #2.

If I remember right, it also stabilized and became quick and normal again after some time. Let's see.

Looks much better now indeed. Already getting "puts" in vdash, so the nanos will be coming up soon.

I start nodes one at a time with a delay between them. Currently I am using a 12 s interval; in older testnets this helped spread the network load evenly instead of creating a sawtooth pattern.

If it helps at all, I can make the delay much larger to spread churn over time. Any recommendations, @joshuef?
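For anyone wanting to script the staggered start described above, here is a minimal Python sketch. It is not the script used in this post: the command that starts one node is left to you (no node CLI flags are assumed), `start_staggered` and `startup_span` are hypothetical helper names, and `launch`/`sleep` are injectable so the timing can be checked without starting real nodes.

```python
import subprocess
import time
from typing import Callable, Sequence


def startup_span(n_nodes: int, interval_s: float) -> float:
    """Seconds between the first and the last launch (no pause after the last one)."""
    return max(n_nodes - 1, 0) * interval_s


def start_staggered(
    commands: Sequence[Sequence[str]],
    interval_s: float = 12.0,
    launch: Callable[[Sequence[str]], object] = subprocess.Popen,
    sleep: Callable[[float], None] = time.sleep,
) -> list:
    """Launch each command in turn, pausing interval_s seconds between launches.

    `commands` is whatever starts one node on your setup (hypothetical here).
    Returns whatever `launch` returns per command (Popen handles by default).
    """
    handles = []
    for i, cmd in enumerate(commands):
        handles.append(launch(list(cmd)))
        if i < len(commands) - 1:
            sleep(interval_s)  # spread joins out instead of starting them in one burst
    return handles
```

With a 12 s interval, `startup_span(100, 12)` is 1188 s, so 100 nodes take roughly 20 minutes to all be launched; raising `interval_s` stretches the same churn over a proportionally longer window.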

None at the moment. It’s something we’ll need to feel out I think.

If there is disruption I would hope it’s temporary. But we’ll have to see (and later account for what we find!)

I deleted a couple of files in my test folder and re-uploaded. On download, the deleted files were missing just as they should. Download was superfast, 13 seconds.

Now re-uploading the test folder (55 MB) gave an error for the first time:

Failed to sync Folder (for foldertest/Folder_B) with the network: Network Error GetRecord Query Error RecordNotFound.

Again, it took a long time:

```
real 5m1,196s
user 0m46,099s
sys  0m17,555s
```

For comparison, uploading a file of about the same size (60 MB) for the first time is also slow. It had 6 chunks fail and retry:

```
real 7m17,896s
user 1m29,436s
sys  0m32,929s
```

It also said:

Unverified file "Waterfall_slo_mo.mp4", suggest to re-upload again.

And on re-upload attempts, it seems that 2 chunks are just not going to go up. I've tried twice now.

EDIT: At the moment uploads are slow and getting the last chunks up is difficult, but it succeeds after a few retries. Downloads continue to be extremely fast.
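The "upload everything, then retry only the chunks that failed" pattern in these reports can be pictured with the Python sketch below. It is a simplified, hypothetical model, not the `safe` client's actual retry logic: `try_upload` stands in for whatever stores one chunk and reports success, and `max_rounds` is an assumed cap. It also shows how a couple of stubborn chunks can survive every round while the rest go up after a retry or two.

```python
from typing import Callable, Iterable, List


def upload_with_retries(
    chunks: Iterable[str],
    try_upload: Callable[[str], bool],  # hypothetical: True if the chunk was stored
    max_rounds: int = 3,                # assumed cap on extra retry rounds
) -> List[str]:
    """Try every chunk once, then re-attempt only the failures, up to max_rounds
    extra rounds. Returns the chunks still failing at the end (empty = success)."""
    pending = [c for c in chunks if not try_upload(c)]  # first pass
    rounds = 0
    while pending and rounds < max_rounds:
        rounds += 1
        # Only the chunks that failed last time are retried.
        pending = [c for c in pending if not try_upload(c)]
    return pending
```

A non-empty return value corresponds to the "Unverified file ..., suggest to re-upload again" situation above: running the upload again simply starts another set of rounds for the leftovers.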

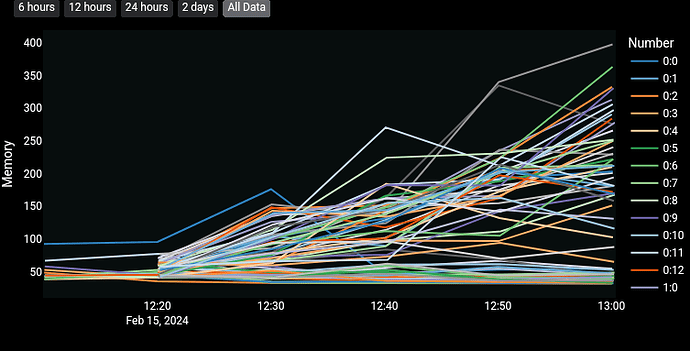

Memory use did stabilise, but I kept getting the same failed chunks on my uploads.

Not sure if that's where the problems originated: lots of nodes join, then uploads start having bad chunks.

Yeah, I wonder why there are these difficult-to-store chunks? Maybe bad nodes? And with churn they eventually find their way onto good nodes?

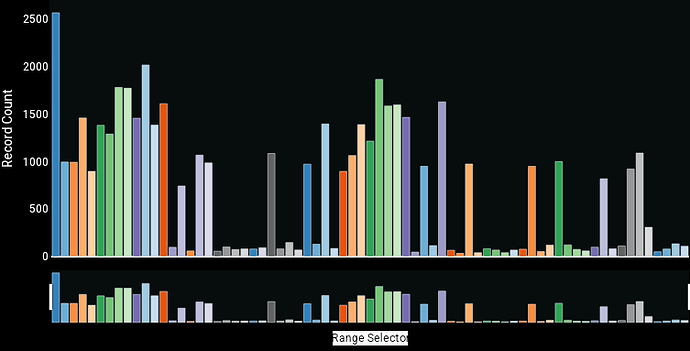

Unlike in the last test, nodes now do pass the 2048-record limit; I have one holding 2500 records.

The distribution was a lot more even with the last net though.

Thanks so much for this wonderful testnet!

Also, the comedy has been wonderful. Not only are you all saving the world, you are funny as well!

I don’t know if the entry of new nodes is to blame but there is a clear drop in upload performance.

I was able to upload over 61,000 chunks in under six hours, but it then took over an hour to upload just over five hundred more.

```
Uploading 63024 chunks
⠈ [05:58:20] [######################################>-] 61021/63024
Retrying failed chunks 2003 …
⠒ [06:57:53] [#######################################>] 61574/63024
⠤ [07:06:57] [#######################################>] 61665/63024
⠓ [07:07:23] [#######################################>] 61670/63024
⠄ [07:07:28] [#######################################>] 61671/63024
⠈ [07:07:36] [#######################################>] 61673/63024
```
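To put numbers on the slowdown, the progress lines above can be turned into an upload rate. This Python sketch assumes the bracketed timer is elapsed time and the `done/total` counter is completed chunks; `parse_progress` and `chunks_per_minute` are hypothetical helpers, not part of the `safe` CLI.

```python
import re
from typing import Tuple

# Elapsed time "[HH:MM:SS]" followed (after the bar) by the "done/total" counter.
_PROGRESS = re.compile(r"\[(\d+):(\d{2}):(\d{2})\].*?(\d+)/(\d+)")


def parse_progress(line: str) -> Tuple[int, int]:
    """Return (elapsed_seconds, chunks_done) from one progress-bar line."""
    m = _PROGRESS.search(line)
    if m is None:
        raise ValueError(f"not a progress line: {line!r}")
    h, mins, s, done, _total = (int(g) for g in m.groups())
    return h * 3600 + mins * 60 + s, done


def chunks_per_minute(earlier: str, later: str) -> float:
    """Average rate between two progress lines, in chunks per minute."""
    t0, d0 = parse_progress(earlier)
    t1, d1 = parse_progress(later)
    return (d1 - d0) / ((t1 - t0) / 60)
```

Applied to the log, it gives about 170 chunks/min for the first 61021 chunks (00:00:00 to 05:58:20) versus about 9.4 chunks/min between 05:58:20 and 07:07:36, a drop of roughly 18×.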