How about uploading? Is that going to be streaming too?

@chriso I am finding that the newly upgraded nodes have many times the records being stored compared to the node before the upgrade.

Watching the metrics stats, nodes would go from single-digit numbers of records to 40, 50, 60, 70, 80, and some even into the high hundreds.

Any ideas? Is this one of the changes where records are being stored on more nodes?

How’s CPU and mem usage with this release vs the last one with the same node count?

Nothing major in CPU/Mem usage. Maybe up to 10% more, but not really noticeable.

When nodes start, they always use more CPU/mem/connections until they settle down.

Note that for non-streaming duties, the chunk-streamer library is currently a bit slower (due to how it fills/drains its buffer). For small/medium files, you won’t notice it, but if the number of chunks is more than your download threads, then it becomes more obvious.

In short, the algorithm could make better use of the thread pool. It’s on my todo list to improve.

On the bright side, memory is constrained by the download thread pool size and it is stable.
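To illustrate why the slowdown only shows up once there are more chunks than download threads, here is a rough sketch. It is not the chunk-streamer library's code; it assumes that "fills/drains its buffer" means a batch of chunks is fetched and fully drained before the next batch starts, and compares that against handing a new chunk to whichever thread frees up first. The function names and the per-chunk durations are made up for illustration.

```python
import heapq


def batch_makespan(durations, threads):
    """Total time if chunks are fetched in batches of `threads`,
    and each batch must finish before the next one starts (assumed model)."""
    total = 0.0
    for i in range(0, len(durations), threads):
        # A batch takes as long as its slowest chunk.
        total += max(durations[i:i + threads])
    return total


def sliding_makespan(durations, threads):
    """Total time if the next chunk starts as soon as any thread is free."""
    free_at = [0.0] * threads  # time at which each thread becomes idle
    heapq.heapify(free_at)
    finish = 0.0
    for d in durations:
        start = heapq.heappop(free_at)  # earliest idle thread
        end = start + d
        finish = max(finish, end)
        heapq.heappush(free_at, end)
    return finish


if __name__ == "__main__":
    chunk_times = [1, 4, 1, 1, 1, 1, 1, 1]  # hypothetical seconds per chunk
    print(batch_makespan(chunk_times, 4), sliding_makespan(chunk_times, 4))
```

With 8 chunks and 4 threads, the batch model takes 5 time units and the sliding model takes 4, because one slow chunk stalls the whole first batch. With 4 or fewer chunks both models give the same result, which fits the observation that small/medium files are unaffected. In both models at most `threads` chunks are in flight at once, so memory stays bounded by the pool size.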

I notice exactly the opposite: I’m using 60% more RAM with the new version (and maybe 30% more CPU).

Also, the older my nodes are, the more CPU/RAM they use (and also more connections).

Is this an hour or more after upgrading? I leave 90 seconds between node upgrades to allow some time for the very initial inrush of CPU/mem/connection activity.

I am looking at 2 of my SBCs (250 nodes each) and not seeing that. If it were happening, my SBCs would be noticeably loaded on CPU and memory. The 250 nodes use 33% of the CPU, so 60% more would put them over 50% usage, and that would be quite noticeable.
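As a quick check of that arithmetic, a small sketch (the helper name is hypothetical; the 33% baseline and the 60% and 30% increases are the figures quoted in this thread):

```python
def projected_usage(current_pct, increase_pct):
    """Usage after a relative increase, rounded to one decimal place."""
    return round(current_pct * (1 + increase_pct / 100), 1)


if __name__ == "__main__":
    print(projected_usage(33, 60))  # 60% relative increase on a 33% baseline
    print(projected_usage(33, 30))  # 30% relative increase on a 33% baseline
```

A 60% relative increase on 33% gives 52.8%, and a 30% increase gives 42.9%, so either would stand out on a machine that normally sits at a third of its CPU.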

These nodes are months old

That shows some variance in node behaviour

In case anyone needs a smaller file, here is Arch Linux (1.2 GB):

ant file download --retries 5 9875177d76c9768edbabe048ad2b2846b8a9de0286bd5e1097813cc0dc75128f . #md5sum 332f59c2ff80f97c13e043e385a2841e archlinux-2025.04.01-x86_64.iso 1.2Gb
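If `md5sum` is not available, the checksum can also be verified with a few lines of Python. This is a minimal sketch using only the standard library; the function names are made up here, and it assumes the download landed in the current directory under the file name given above.

```python
import hashlib


def md5_of_file(path, block_size=1024 * 1024):
    """Return the hex MD5 digest of a file, reading it in blocks
    so a 1.2 GB ISO does not have to fit in memory."""
    digest = hashlib.md5()
    with open(path, "rb") as fh:
        for block in iter(lambda: fh.read(block_size), b""):
            digest.update(block)
    return digest.hexdigest()


def matches_md5(path, expected_hex):
    """Compare a file's MD5 against an expected hex string."""
    return md5_of_file(path) == expected_hex.strip().lower()
```

For the file above, that would be `matches_md5("archlinux-2025.04.01-x86_64.iso", "332f59c2ff80f97c13e043e385a2841e")`.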

I agree with @neo

Looks good to me. Someone pulled the plug on several 100k nodes in one go, and load averages went up high for a while, then it all settled back down to normal. I am upgrading nodes with a 5 min delay, as there is no need to rush it.

load averages for last 24 hours

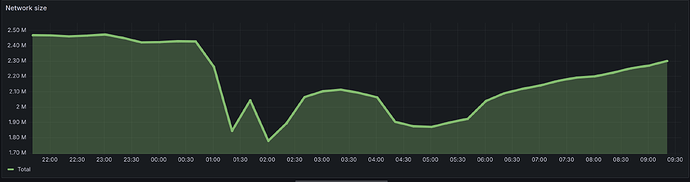

network size last 24 hours

Also, the network size is rebounding fast, as if they overloaded their setup, had to pull the plug, and are starting nodes more slowly on the second attempt.

What interests me the most, and I wonder if others have noticed it, is the increase in records being stored. I sat there watching my nodes upgrade for 10 minutes, and as each node upgraded it went from storing up to 10 records to many times that within a couple of minutes. So this wasn’t the network losing nodes at the same time as each node was upgrading, since the other nodes did not increase until they upgraded.

I guess nobody said anything about not pulling the plug on 600k nodes in one go

This is several hours after upgrading.

My nodes' CPU usage increasing over time is something that I noticed on at least the last 2 versions (and it gradually increases every day).

Not seeing it here. I am getting records stored from metrics; relevant records are labelled as live records to save space in the table header. These are my upgraded nodes.

Over 4 machines, it is not something I am seeing. Wonder what the difference is?

That is odd indeed.

I’ve seen it on all of my SBCs and on the PC. Also 2 different ISP accounts.

Wonder why? Did something change in /metrics and the records stored figure has changed somehow?

All my relevant records on upgraded nodes are showing zero at present, so perhaps I'm not seeing it and need to update Ntracking.

Relevant records will only show once a quote is done. And only updated each time a quote is done. Not even a store updates it.

That means the uploader gets a quote, which updates the figure, and then the store occurs and the figure still shows the old number, not the number incremented by the store.
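The quote/store ordering described above can be sketched as a toy model. This is not the node's code; the class and attribute names are hypothetical, and for simplicity it treats every stored record as relevant.

```python
class NodeSketch:
    """Toy model: the reported figure refreshes only when a quote is produced."""

    def __init__(self):
        self.stored = 0             # records the node actually holds
        self.reported_relevant = 0  # figure exposed through metrics

    def quote(self):
        # Producing a quote is the only event that refreshes the figure.
        self.reported_relevant = self.stored
        return self.reported_relevant

    def store(self):
        # A store adds a record but leaves the reported figure untouched.
        self.stored += 1


if __name__ == "__main__":
    node = NodeSketch()
    node.stored = 5
    node.quote()   # figure refreshed
    node.store()   # record added, figure stays stale
    print(node.stored, node.reported_relevant)
```

After a quote followed by a store, the node holds one more record than the figure reports, and the two only line up again at the next quote.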

Actually, I just looked, and one node has already quoted once and its relevant records figure is a modest 5. So the increase is not really due to relevant records.

Tbf, since only the latest version earns tokens, I can see why some big operators may move quicker than they otherwise would.

It would probably be better to continue payments to the previous version, assuming that hasn’t changed back again.

Or give a 3-day grace period.

Having a transition phase of 1 to 3 days would probably be enough to motivate large operators to implement a “rolling upgrade”, replacing nodes one by one. Earnings there would be higher than doing a hard reset, ramping up again from 0 nodes, and having earnings drop to 0 for a short moment too.
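A rolling upgrade of this kind can be sketched as a simple loop with a fixed pause between nodes. This is only an illustration: `upgrade_one` is a hypothetical callable standing in for whatever command an operator actually uses, and the 250-node and 90-second figures are the ones mentioned earlier in the thread.

```python
import time


def rolling_upgrade(nodes, upgrade_one, delay_s, sleep=time.sleep):
    """Upgrade nodes one at a time, pausing `delay_s` seconds between them.

    `upgrade_one` is a placeholder for the real per-node upgrade step."""
    upgraded = []
    for i, node in enumerate(nodes):
        if i > 0:
            sleep(delay_s)  # let the previous node's startup inrush settle
        upgrade_one(node)
        upgraded.append(node)
    return upgraded


def total_wait_s(node_count, delay_s):
    """Total time spent pausing (there is no pause before the first node)."""
    return max(node_count - 1, 0) * delay_s


if __name__ == "__main__":
    print(total_wait_s(250, 90) / 3600)   # hours of pauses at 90 s spacing
    print(total_wait_s(250, 300) / 3600)  # hours of pauses at 5 min spacing
```

For 250 nodes, 90 seconds between upgrades adds up to a bit over 6 hours of pauses, and 5 minutes adds up to just under 21 hours, so either spacing fits comfortably inside a grace period of a few days.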

haha - sometimes @born2build does make reasonable comments too …

…

“…minds think alike” xD does this make you iq42 too born?