Yeah, I really must get around to changing that hostname. It's actually originally a snapshot that got migrated to a slightly bigger box.

Yes, it was all self-inflicted problems by @Southside.

It’s not necessarily self-inflicted. The default retry value might be too low.

Edit: @aatonnomicc, it might be worth providing some smaller files to test with too.

The problem on the local box was self-inflicted with the alias; any problems on the VPS need to be looked at carefully.

I’ll tell you in a bit…

Fetching chunk 981/1497 ...

Fetching chunk 977/1497 [DONE]

Fetching chunk 982/1497 ...

Fetching chunk 969/1497 [DONE]

Yeah, if all the chunks downloaded, that's the issue.

Sorry, but there will be a fix for this soon. For now, try with smaller files.

Could you still update your original post, please, to suggest trying a larger value for --retries?

Yeah, I'm waiting for the download on the other VPS to complete or fail first.

OK thanks.

Btw, in general, results or experience from home connections would probably be of more interest.

I'm running it from home now, on a 500 Mb/s fibre line.

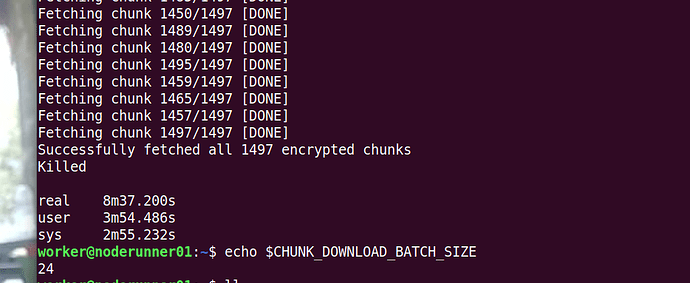

CHUNK_DOWNLOAD_BATCH_SIZE=24, retries set to 20
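To illustrate how the two knobs in that run might interact, here is a minimal sketch in Python. It is not the client's code: `fetch_chunk` is a hypothetical stand-in for the network request, the thread pool gives a sliding window of at most `batch_size` concurrent fetches (the real client may fetch in fixed batches instead), and each chunk is assumed to get up to `retries` attempts before the whole download fails.

```python
import concurrent.futures
import time


class ChunkFetchError(Exception):
    """Raised when a chunk could not be fetched after all retries."""


def fetch_with_retries(fetch_chunk, index, retries, backoff=0.0):
    """Call fetch_chunk(index) up to `retries` times (hypothetical helper)."""
    last_error = None
    for attempt in range(1, retries + 1):
        try:
            return fetch_chunk(index)
        except Exception as err:  # treat any failure as retryable in this sketch
            last_error = err
            if backoff:
                time.sleep(backoff * attempt)  # simple linear backoff between attempts
    raise ChunkFetchError(f"chunk {index} failed after {retries} attempts") from last_error


def fetch_all(fetch_chunk, total, batch_size=24, retries=20):
    """Fetch chunks 0..total-1 with at most `batch_size` fetches in flight."""
    results = [None] * total
    with concurrent.futures.ThreadPoolExecutor(max_workers=batch_size) as pool:
        futures = {
            pool.submit(fetch_with_retries, fetch_chunk, i, retries): i
            for i in range(total)
        }
        for done in concurrent.futures.as_completed(futures):
            i = futures[done]
            results[i] = done.result()  # re-raises ChunkFetchError if retries ran out
            print(f"Fetching chunk {i + 1}/{total} [DONE]")
    return results
```

With a fetch that times out twice per chunk, `retries=5` succeeds while `retries=2` raises `ChunkFetchError`. That mirrors why a default that is too low can fail a long download on a flaky connection even when most chunks arrive fine.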

Fetching chunk 1463/1497 [DONE]

Fetching chunk 1458/1497 [DONE]

Successfully fetched all 1497 encrypted chunks

Successfully decrypted all 1497 chunks

Successfully downloaded data at: 8d715daa99904615210449f53681c2f9f4bce871d5fc1312d2b571a537a5bb5d

real 5m20.452s

user 3m10.369s

sys 2m39.143s

And on both 8GB VPSes, the 6GB download was too big and the job was killed, but it was quick and humane…
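The thread only says this is a known issue. Assuming the kill came from the whole 6GB file being buffered in RAM before being written out, one common mitigation is to write each decrypted chunk to its position in the output file as soon as it arrives, so peak memory stays around one chunk per in-flight fetch rather than the whole file. The sketch below is hypothetical and simplifies self-encryption by assuming each decrypted chunk maps to a fixed-size slice of the file.

```python
import os


def write_chunks_to_file(path, chunks, chunk_size):
    """Write (index, data) pairs to `path` at offset index * chunk_size.

    Chunks may arrive in any order. Provided `chunks` is a lazy iterator,
    only the chunk currently being written needs to be held in memory.
    Assumes every chunk except the last is exactly chunk_size bytes
    (a hypothetical layout, not the real self-encryption scheme).
    """
    with open(path, "wb") as out:
        for index, data in chunks:
            out.seek(index * chunk_size)  # seeking past EOF leaves a hole filled later
            out.write(data)
    return os.path.getsize(path)
```

Writing out of order works because seeking past the current end of file and writing extends it; any gap is filled when the earlier chunk arrives.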

OK, thanks. That’s a known issue.

I'd appreciate it if you could still edit your original post, as it's quite early in the thread.

OK, done now.

Cool, thanks. You didn’t mention using --retries there? Did you not have to modify that?

Just double-checked: that downloaded OK without any --retries parameter.

Could we possibly set a default retries value of, say, 10?

I already discussed raising the value. I will bring it up again. I only expected to have issues with it on poorer home connections.

I was going to ask about this. I have two files, one over 10TB and the other many times that size, that I want to upload one day. I won't be downloading them as whole files, just individual chunks (they're more like index files for some maths I want to do), which means the downloading should be fine. Gas just costs too much at the moment, but it would certainly help put some data onto the network.

It's purely binary numbers, and I can easily chop it up, encrypt it, and pass it to be uploaded without self-encryption. (I'd modify the client to upload each separate chunk as if it were a file, which it actually is.) Then I would have my own datamap of sorts: the chunks uploaded as private data, plus a "datamap" that indexes the index file (LOL) uploaded as a public file.
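A rough sketch of the chop-and-index part of that plan, in Python. Everything here is hypothetical: the encryption and upload steps are left out, and the SHA-256 of each piece is only a stand-in for whatever address the network would actually assign. The point is that with fixed-size pieces, the piece holding any byte offset can be found by integer division, so only that piece needs to be fetched.

```python
import hashlib


def build_index(path, piece_size):
    """Split the file at `path` into fixed-size pieces and index them.

    Returns a list of dicts with the piece number, byte offset, length and
    SHA-256 of each piece (a stand-in for a real network address).
    """
    index = []
    offset = 0
    with open(path, "rb") as f:
        while True:
            piece = f.read(piece_size)
            if not piece:
                break
            index.append({
                "piece": len(index),
                "offset": offset,
                "length": len(piece),
                "sha256": hashlib.sha256(piece).hexdigest(),
            })
            offset += len(piece)
    return index


def piece_for_offset(index, byte_offset, piece_size):
    """Return the index entry containing byte_offset, so only that piece is fetched."""
    return index[byte_offset // piece_size]
```

The resulting list serialises directly with `json.dumps`, which would make it a natural candidate for the small public "index of the index" file.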

Sorry, I’m not sure I see a question here exactly?

More of a comment. I was going to ask whether it's in-memory only or not, but you answered it, so I changed my post.

This is good to hear. I was going to start working on adding the chunk streaming stuff that @Traktion implemented into Colony. Are you taking a similar approach? Any ETA on when this will be available?

Hey, sorry, I'm not sure exactly what approach we are going to take. I can get back to you on that. Regarding the ETA, now that uploads and downloads are looking pretty reliable, we will be looking to get this fixed very soon. People not being able to download larger files is not a good look for us. I think a hotfix release next week is very likely.

Thanks, that would be great. From an app perspective, having this be a stream would be nice, so I can show download progress to the user in a status bar rather than a spinner.
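The thread doesn't say what the streaming interface will look like, so this is only a hypothetical Python sketch of the app-side idea: wrap whatever iterator yields chunks in a generator that reports `(done, total)` after each one, and let the UI turn that into a status bar. `stream_with_progress` and `text_bar` are illustrative names, not part of any client API.

```python
from typing import Callable, Iterable, Iterator


def stream_with_progress(chunks: Iterable[bytes], total_chunks: int,
                         on_progress: Callable[[int, int], None]) -> Iterator[bytes]:
    """Yield chunks one at a time, calling on_progress(done, total) after each."""
    done = 0
    for chunk in chunks:
        done += 1
        on_progress(done, total_chunks)
        yield chunk


def text_bar(done: int, total: int, width: int = 20) -> str:
    """Render a simple text progress bar such as '[##--] 2/4'."""
    filled = done * width // total if total else 0
    return "[" + "#" * filled + "-" * (width - filled) + f"] {done}/{total}"
```

For example, `text_bar(2, 4, width=4)` gives `[##--] 2/4`. Because the generator is lazy, the consumer can also write each chunk to disk as it is yielded instead of collecting the whole file in memory.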