Probably we could make this simpler if we had a list of “recommended peers” published at some address that we pulled from. That would remove the SAFE_PEERS part. As for logging, unless we have issues these days, I’m aiming to not need full trace logs (all being well, we can probably remove that from the post for future testnets).

In terms of data spread, no nodes are storing 0 chunks so far, which is a fair bit healthier than before.

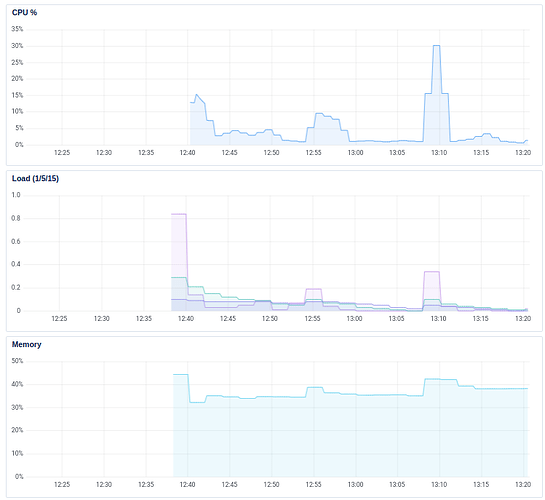

Memory seems to be peaking at a few hundred MB (~250 MB). We’ve lost some nodes there, but memory isn’t running away (many nodes hit that peak and come back down), so I’d say those lost nodes are due to overcrowding (which is expected for this testnet). We’ll see how many nodes per machine we end up with!

CPU usage across nodes seems fine (0-10% from what I’ve seen).

If you’re starting nodes, please do let us know how CPU and memory usage are looking and how many records you have in your record_store.
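For anyone reporting back, here’s a rough sketch for gathering those numbers on Linux. It assumes the node data layout seen in the log paths in this thread (~/.local/share/safe/node/<peer-id>/record_store, with one file per record); the function names are just illustrative and are not part of safeup or safenode.

```bash
#!/usr/bin/env bash
# Sketch only: report CPU/memory and record counts for the nodes on this machine.
# Assumed layout: <root>/<peer-id>/record_store/, one file per stored record.

# CPU (%) and resident memory (RSS, in KiB) of every running safenode process.
# Uses the Linux (procps) `ps -C` selector.
node_usage() {
  ps -C safenode -o pid=,%cpu=,rss= || echo "no safenode processes found"
}

# Prints one line per node: "<peer-id> <record count>".
# Counts regular files only, so there are no header or ./.. lines to subtract.
count_records() {
  local root="${1:-$HOME/.local/share/safe/node}"
  local dir n
  for dir in "$root"/*/record_store; do
    [ -d "$dir" ] || continue    # the glob stays literal when nothing matches
    n=$(( $(find "$dir" -maxdepth 1 -type f | wc -l) ))
    printf '%s %s\n' "$(basename "$(dirname "$dir")")" "$n"
  done
}
```

Source it and run node_usage and count_records; pass a different root to count_records if your nodes keep their data elsewhere.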

I was also planning to add something like this to safeup:

safeup node --service

That would set the node up as a service.
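As a rough idea of what such an option could generate, here’s a hypothetical sketch that prints a systemd user unit. The ~/.local/bin path, the example port and passing the initial peer through the SAFE_PEERS environment variable are assumptions, not something safeup does today; where that peer comes from is still the open question.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of what `safeup node --service` could produce: a systemd
# *user* unit. Binary path, default port and SAFE_PEERS usage are assumptions.
write_unit() {
  local peer="$1" port="${2:-12000}"   # 12000 is an arbitrary example port
  cat <<EOF
[Unit]
Description=Safe Network node

[Service]
Environment=SAFE_PEERS=$peer
ExecStart=%h/.local/bin/safenode --port $port
Restart=on-failure

[Install]
WantedBy=default.target
EOF
}
```

To try it, save the output as ~/.config/systemd/user/safenode.service, then run systemctl --user daemon-reload and systemctl --user enable --now safenode.service. Running loginctl enable-linger for your user keeps user services going after you log out.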

It would still need to get the initial peer from somewhere, though. Publishing that to a location the node could read would be quite a cool feature, I think, and we could easily update it as part of a testnet deployment.
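A minimal sketch of the node-side half of that idea, assuming a hypothetical plain-text file (one multiaddr per line, # for comments) published at a placeholder URL. It picks the first entry and exports it as SAFE_PEERS, the variable people currently set by hand; the URL, file format and function names are made up for illustration, and whether SAFE_PEERS accepts more than one address isn’t covered here.

```bash
#!/usr/bin/env bash
# Hypothetical: PEERS_URL and the one-multiaddr-per-line format are assumptions,
# not an existing endpoint. Requires curl.
PEERS_URL="${PEERS_URL:-https://example.com/testnet/peers.txt}"

# Print the first line from stdin that is neither blank nor a # comment.
pick_peer() {
  grep -v -e '^[[:space:]]*#' -e '^[[:space:]]*$' | head -n 1
}

# Fetch the published list and export a single peer as SAFE_PEERS.
load_peers() {
  local peer
  peer=$(curl -fsSL "$PEERS_URL" | pick_peer)
  [ -n "$peer" ] || { echo "no peers found at $PEERS_URL" >&2; return 1; }
  export SAFE_PEERS="$peer"
}
```

Keeping the list as plain text means a testnet deployment only has to overwrite one file for nodes to pick up new bootstrap peers the next time they start.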

Right now CPU is at 0-1%, RAM is at 60-110 MB, and the record count is 1,230.

Looking good from home, but my AWS instance fails:

The client still does not know enough network nodes.

Storing file "records.html" of 890 bytes..

Did not store file "records.html" to all nodes in the close group! Network Error Outbound Error.

Storing file "upload.html" of 1146 bytes..

Did not store file "upload.html" to all nodes in the close group! Network Error Outbound Error.

Storing file "learning_endpoints.py" of 174 bytes..

Did not store file "learning_endpoints.py" to all nodes in the close group! Network Error Outbound Error.

Storing file "app.py" of 3045 bytes..

Did not store file "app.py" to all nodes in the close group! Network Error Outbound Error.

Storing file "distutils-precedence.pth" of 152 bytes..

Did not store file "distutils-precedence.pth" to all nodes in the close group! Network Error Outbound Error.

Can someone remind me what ports I should be opening on AWS, please?

Also, I get this when I run the node on AWS. Do I have the security groups wrong, or is this a NAT detection false positive?

Logging to directory: "/home/ubuntu/.local/share/safe/node/12D3KooWRHmqkvDv9RP9DXEvu3ChNboeo8aYuokv93QCbpUzdtM7/logs"

Using SN_LOG=all

Node is stopping in 1s... Node log path: /home/ubuntu/.local/share/safe/node/12D3KooWRHmqkvDv9RP9DXEvu3ChNboeo8aYuokv93QCbpUzdtM7/logs

Error: We have been determined to be behind a NAT. This means we are not reachable externally by other nodes. In the future, the network will implement relays that allow us to still join the network.

Location:

sn_node/src/bin/safenode/main.rs:318:36

Failed the begblag test from home…

willie@gagarin:~$ safe files download begblag.mp3 3eb0873bd425e5599d72c2873ca6e691d5de5c75bbc89f2e088fa95e3390927a

Built with git version: 95b8dad / main / 95b8dad

Instantiating a SAFE client...

⠁ Connecting to The SAFE Network... The client still does not know enough network nodes.

🔗 Connected to the Network

Downloading file "begblag.mp3" with address 3eb0873bd425e5599d72c2873ca6e691d5de5c75bbc89f2e088fa95e3390927a

Client download progress 28/31

Did not get file "begblag.mp3" from the network! Chunks error Not all chunks were retrieved, expected 31, retrieved 28, missing [23f2ab(00100011).., 15dbaf(00010101).., b5e01a(10110101)..]..

Works fine from an AWS instance, though.

EDIT: spoke too soon. Last time I checked, begblag was ~15 MB:

ubuntu@ip-172-31-45-225:~$ safe files download begblag.mp3 3eb0873bd425e5599d72c2873ca6e691d5de5c75bbc89f2e088fa95e3390927a

Logging to directory: "/tmp/safe-client"

Using SN_LOG=all

Built with git version: 74528f5 / main / 74528f5

Instantiating a SAFE client...

🔗 Connected to the Network

Downloading file "begblag.mp3" with address 3eb0873bd425e5599d72c2873ca6e691d5de5c75bbc89f2e088fa95e3390927a

Successfully got file begblag.mp3!

Writing 2499 bytes to "/home/ubuntu/.safe/client/begblag.mp3"

2499 bytes looks iffy to me…

I have 1,580 records.

This would be great!

How are you guys setting up multiple nodes per instance? It’s not in the tool yet, is it? Doing that manually over 100 droplets seems like it’s going to be a schlep.

The support for that is on a branch that Josh has, but it’s not the mechanism we’ll be using long term.

Angus has been working on extensions for the testnet tool, which will include the ability to deploy multiple nodes on one machine. Because he’s still getting to grips with how it all works, and is fairly new to this kind of work in general, the pace has been a bit slow.

@aatonnomicc can you repeat this and check the downloaded file size please?

I just tried again from home and got a sensible file size, and it plays OK:

Downloading file "begblag.mp3" with address 3eb0873bd425e5599d72c2873ca6e691d5de5c75bbc89f2e088fa95e3390927a

Client download progress 31/31

Successfully got file begblag.mp3!

Writing 15766382 bytes to "/home/willie/.local/share/safe/client/begblag.mp3"
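Since a truncated download can still report success (2499 bytes versus 15766382 here), a quick size check is worth doing. This sketch compares a file’s byte count against an expected value; check_size is an illustrative name, and the expected size has to come from somewhere you trust (here it’s simply the size of the good download).

```bash
#!/usr/bin/env bash
# Sketch: flag a download whose size doesn't match the expected byte count.
# Returns 0 on match, 1 on mismatch, 2 if the file can't be read.
check_size() {
  local file="$1" expected="$2" actual
  actual=$(wc -c < "$file") || return 2
  actual=$(( actual ))   # strip padding that some wc implementations add
  if (( actual == expected )); then
    echo "ok: $file is $actual bytes"
  else
    echo "size mismatch: $file is $actual bytes, expected $expected" >&2
    return 1
  fi
}
```

For example: check_size ~/.local/share/safe/client/begblag.mp3 15766382 (note the client’s download directory differs between the two builds in this thread).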

Has anybody had success from an AWS node? If so, can you share your security group rules, please?

I’m told I’m behind a NAT with a t2.micro in eu-west-1. It previously worked fine, so it’s a daft mistake somewhere on my part.

I think there isn’t much alternative but to open the whole 16-bit range, so TCP 0-65535.

The nodes all run on random ports, and port numbers are 16-bit integers.
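To put numbers on that: a 16-bit port runs from 0 to 2^16 - 1 = 65535. This sketch opens that TCP range on an EC2 security group with the AWS CLI’s aws ec2 authorize-security-group-ingress; the security group ID is a placeholder, open_tcp_range is an illustrative name, and the CLI needs configured credentials. 0.0.0.0/0 lets the whole internet reach every TCP port on the instance, so it’s worth keeping that box dedicated to the node.

```bash
#!/usr/bin/env bash
# TCP port numbers are 16-bit unsigned integers: 0 .. 2^16 - 1.
MAX_PORT=$(( (1 << 16) - 1 ))   # 65535

# Open an inbound TCP port range on an EC2 security group (placeholder ID).
# Defaults to the full range; rejects anything outside 0..65535.
open_tcp_range() {
  local sg_id="$1" from="${2:-0}" to="${3:-$MAX_PORT}"
  if (( from < 0 || to > MAX_PORT || from > to )); then
    echo "invalid port range: $from-$to" >&2
    return 1
  fi
  aws ec2 authorize-security-group-ingress \
    --group-id "$sg_id" --protocol tcp --port "$from-$to" --cidr 0.0.0.0/0
}
```

Calling open_tcp_range with just your security group ID opens 0-65535; passing a narrower from/to pair is the better option if you pin your node’s port.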

That works!

570+ chunks already:

ubuntu@ip-172-nn-nn-nn5:~/.local/share/safe/node/12D3KooWP8zCQ3pKe744Pcfw9WfzYY6AMquwyuYCHTVyMYkRAorC$ ll record_store/|wc -l

574

I was a bit reluctant to open these ports, never had to do that before.

Is it no longer possible to specify a port with --port=12000, or whatever it may be?

Am I being daft?

rock64@one:~/.local/bin$ ls

safe safenode

rock64@one:~/.local/bin$ safenode --log-output-dest data-dir

safenode: command not found

The answer is yes, I am: it was not in my PATH.
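For anyone else hitting this: safe and safenode sit in ~/.local/bin, which isn’t always on PATH. On Ubuntu-style setups, ~/.profile typically only adds it at login, and only if the directory already existed, so a fresh install may need a re-login or a fix for the current shell like this sketch (add_to_path is just an illustrative helper name).

```bash
#!/usr/bin/env bash
# Prepend a directory to PATH unless it's already there.
add_to_path() {
  local dir="$1"
  case ":$PATH:" in
    *":$dir:"*) ;;                      # already present: nothing to do
    *) PATH="$dir:$PATH"; export PATH ;;
  esac
}

add_to_path "$HOME/.local/bin"
command -v safenode || echo "safenode still not found on PATH" >&2
```

To make it stick, add export PATH="$HOME/.local/bin:$PATH" to ~/.bashrc.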

@Southside

Edit: I just started a node behind NAT with --port=xxxxx and it appears to work; it hasn’t bailed yet.

Edit: it sure does work. The 8 GB disk is full already.

I’ll bring my 8 smoothly running nodes down to add more storage.

You can specify the port you want to run on, yeah.
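Pinning ports also means only those ports need opening rather than the full range. This sketch runs several nodes on one machine on consecutive ports using the --port flag from this thread; BASE_PORT and the helper names are illustrative, and it assumes (as the per-peer-id log paths in this thread suggest) that each node gets its own data directory.

```bash
#!/usr/bin/env bash
# Sketch: consecutive fixed ports so the firewall only needs
# BASE_PORT .. BASE_PORT+COUNT-1 open, not 0-65535.
# 12000 is an arbitrary example, not a network default.
BASE_PORT="${BASE_PORT:-12000}"

# Port for the i-th node (0-based).
node_port() {
  echo $(( BASE_PORT + $1 ))
}

# Start COUNT nodes in the background, one port each.
start_nodes() {
  local count="$1" i
  for (( i = 0; i < count; i++ )); do
    safenode --port "$(node_port "$i")" --log-output-dest data-dir &
  done
}
```

With BASE_PORT=12000, start_nodes 8 uses ports 12000-12007, so the firewall or security group only needs that TCP range open.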

I think that range was for the purpose of communication with other nodes. It may have just applied to us running lots of nodes on AWS, but I remember having a discussion with Benno and Angus about it.

It worked with one, but I’m having difficulties with a second: “Not enough peers in the k-bucket to satisfy the request”. What does that mean?

Hmm, I’m not sure. That sounds like the sort of thing you would expect if you were running those nodes only on a local network.

I’d need to defer to Josh on that one.