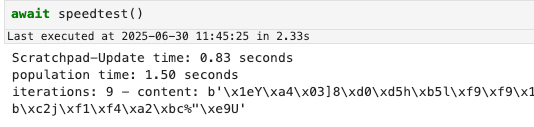

In Colony I started noticing some new errors related to pointers and scratchpads that I hadn't seen before the last update. This batch of errors has only happened once, so maybe it was a one-off. Sometimes I have to hit the pointer/scratchpad update several times for it to take, whereas before it always 'just worked'. I'll also note that updating these two mutable types now takes, on average, twice as long as it did before the update. I see these problems on mainnet only; alpha and local are working fine for me:

2025-07-02T01:52:11.911989Z ERROR ThreadId(04) autonomi::client::data_types::pointer: /cargo/registry/src/index.crates.io-1949cf8c6b5b557f/autonomi-0.5.0/src/client/data_types/pointer.rs:288: Failed to update pointer at address aaa518a2cf8260f6bebc769c16b8147ea215adf569696497b7fc1f250823d89a49990187e83fc0f9ae1cf3d44afb7dce to the network: Put verification failed: Peers have conflicting entries for this record: {PeerId("12D3KooWSuTm6wx2myt5BVY6JGXyBZ7eVhYdbeLAub66iKBA5wTV"): Record { key: Key(b"\0\xd5\xa4s\xf9\x10\x9d\xfe\xbd\xdeo\xf6H\x0b_\xee\xf1bX\xa7X\xb4\"9\xe1\xf8;\xd3w\xf9\xc7\xb8"), value: [145, 2, 148, 220, 0, 48, 204, 170, 204, 165, 24, 204, 162, 204, 207, 204, 130, 96, 204, 246, 204, 190, 204, 188, 118, 204, 156, 22, 204, 184, 20, 126, 204, 162, 21, 204, 173, 204, 245, 105, 105, 100, 204, 151, 204, 183, 204, 252, 31, 37, 8, 35, 204, 216, 204, 154, 73, 204, 153, 1, 204, 135, 204, 232, 63, 204, 192, 204, 249, 204, 174, 28, 204, 243, 204, 212, 74, 204, 251, 125, 204, 206, 1, 129, 177, 83, 99, 114, 97, 116, 99, 104, 112, 97, 100, 65, 100, 100, 114, 101, 115, 115, 220, 0, 48, 204, 178, 36, 204, 145, 24, 30, 204, 166, 54, 204, 143, 204, 229, 116, 29, 90, 19, 204, 255, 57, 204, 226, 204, 135, 29, 204, 161, 60, 204, 190, 204, 208, 204, 220, 204, 178, 204, 238, 119, 204, 195, 204, 165, 20, 103, 35, 204, 211, 204, 233, 87, 93, 204, 190, 60, 87, 204, 191, 124, 118, 204, 155, 48, 204, 200, 71, 204, 193, 204, 206, 7, 220, 0, 96, 204, 148, 53, 22, 204, 238, 54, 204, 203, 204, 142, 204, 162, 111, 204, 176, 57, 204, 224, 204, 205, 26, 204, 243, 91, 204, 167, 22, 204, 190, 98, 204, 245, 127, 204, 253, 204, 200, 204, 153, 77, 204, 234, 57, 204, 142, 2, 100, 204, 253, 48, 204, 199, 59, 204, 199, 204, 130, 204, 185, 204, 180, 33, 204, 164, 204, 198, 204, 185, 86, 40, 21, 204, 180, 31, 11, 204, 237, 13, 204, 201, 204, 187, 204, 181, 204, 146, 204, 172, 204, 142, 93, 204, 158, 57, 86, 25, 36, 204, 188, 72, 80, 96, 101, 26, 204, 225, 204, 239, 100, 40, 204, 176, 204, 166, 104, 
19, 204, 199, 21, 204, 151, 204, 224, 204, 250, 204, 174, 79, 204, 234, 102, 83, 111, 46, 68, 204, 167, 204, 221, 8, 122, 204, 149, 204, 252], publisher: None, expires: None }, PeerId("12D3KooWLmfdTmmTtKDwcKey28sybA1Kh9SShwAjf84e8pnPsSZT"): Record { key: Key(b"\0\xd5\xa4s\xf9\x10\x9d\xfe\xbd\xdeo\xf6H\x0b_\xee\xf1bX\xa7X\xb4\"9\xe1\xf8;\xd3w\xf9\xc7\xb8"), value: [145, 2, 148, 220, 0, 48, 204, 170, 204, 165, 24, 204, 162, 204, 207, 204, 130, 96, 204, 246, 204, 190, 204, 188, 118, 204, 156, 22, 204, 184, 20, 126, 204, 162, 21, 204, 173, 204, 245, 105, 105, 100, 204, 151, 204, 183, 204, 252, 31, 37, 8, 35, 204, 216, 204, 154, 73, 204, 153, 1, 204, 135, 204, 232, 63, 204, 192, 204, 249, 204, 174, 28, 204, 243, 204, 212, 74, 204, 251, 125, 204, 206, 0, 129, 177, 83, 99, 114, 97, 116, 99, 104, 112, 97, 100, 65, 100, 100, 114, 101, 115, 115, 220, 0, 48, 204, 178, 36, 204, 145, 24, 30, 204, 166, 54, 204, 143, 204, 229, 116, 29, 90, 19, 204, 255, 57, 204, 226, 204, 135, 29, 204, 161, 60, 204, 190, 204, 208, 204, 220, 204, 178, 204, 238, 119, 204, 195, 204, 165, 20, 103, 35, 204, 211, 204, 233, 87, 93, 204, 190, 60, 87, 204, 191, 124, 118, 204, 155, 48, 204, 200, 71, 204, 193, 204, 206, 7, 220, 0, 96, 204, 177, 204, 217, 204, 141, 204, 180, 204, 243, 36, 204, 136, 204, 150, 204, 251, 8, 204, 151, 204, 203, 204, 157, 204, 165, 64, 204, 159, 58, 1, 204, 198, 49, 122, 204, 253, 108, 58, 11, 126, 29, 83, 204, 164, 204, 189, 61, 65, 204, 150, 204, 166, 4, 93, 87, 204, 191, 106, 204, 193, 23, 102, 25, 204, 139, 204, 163, 33, 95, 204, 171, 15, 204, 179, 204, 185, 204, 156, 114, 204, 134, 204, 214, 204, 221, 20, 77, 19, 204, 152, 56, 204, 156, 79, 204, 215, 7, 114, 126, 204, 171, 73, 88, 34, 204, 233, 38, 26, 14, 65, 204, 150, 204, 236, 118, 35, 53, 23, 22, 65, 204, 233, 120, 204, 233, 81, 90, 204, 232, 119, 9, 107, 31, 204, 131, 9], publisher: None, expires: None }}

2025-07-02T01:52:11.913580Z ERROR ThreadId(04) colonylib::pod: /cargo/registry/src/index.crates.io-1949cf8c6b5b557f/colonylib-0.4.3/src/pod.rs:1845: Error occurred: Pointer(PutError(Network { address: NetworkAddress::PointerAddress(aaa518a2cf8260f6bebc769c16b8147ea215adf569696497b7fc1f250823d89a49990187e83fc0f9ae1cf3d44afb7dce) - (acae9b20a0dc6d7da27aa34239d97ee231fd389b014eace164e0171e7fd28969), network_error: PutRecordVerification("Peers have conflicting entries for this record: {PeerId(\"12D3KooWSuTm6wx2myt5BVY6JGXyBZ7eVhYdbeLAub66iKBA5wTV\"): Record { key: Key(b\"\\0\\xd5\\xa4s\\xf9\\x10\\x9d\\xfe\\xbd\\xdeo\\xf6H\\x0b_\\xee\\xf1bX\\xa7X\\xb4\\\"9\\xe1\\xf8;\\xd3w\\xf9\\xc7\\xb8\"), value: [145, 2, 148, 220, 0, 48, 204, 170, 204, 165, 24, 204, 162, 204, 207, 204, 130, 96, 204, 246, 204, 190, 204, 188, 118, 204, 156, 22, 204, 184, 20, 126, 204, 162, 21, 204, 173, 204, 245, 105, 105, 100, 204, 151, 204, 183, 204, 252, 31, 37, 8, 35, 204, 216, 204, 154, 73, 204, 153, 1, 204, 135, 204, 232, 63, 204, 192, 204, 249, 204, 174, 28, 204, 243, 204, 212, 74, 204, 251, 125, 204, 206, 1, 129, 177, 83, 99, 114, 97, 116, 99, 104, 112, 97, 100, 65, 100, 100, 114, 101, 115, 115, 220, 0, 48, 204, 178, 36, 204, 145, 24, 30, 204, 166, 54, 204, 143, 204, 229, 116, 29, 90, 19, 204, 255, 57, 204, 226, 204, 135, 29, 204, 161, 60, 204, 190, 204, 208, 204, 220, 204, 178, 204, 238, 119, 204, 195, 204, 165, 20, 103, 35, 204, 211, 204, 233, 87, 93, 204, 190, 60, 87, 204, 191, 124, 118, 204, 155, 48, 204, 200, 71, 204, 193, 204, 206, 7, 220, 0, 96, 204, 148, 53, 22, 204, 238, 54, 204, 203, 204, 142, 204, 162, 111, 204, 176, 57, 204, 224, 204, 205, 26, 204, 243, 91, 204, 167, 22, 204, 190, 98, 204, 245, 127, 204, 253, 204, 200, 204, 153, 77, 204, 234, 57, 204, 142, 2, 100, 204, 253, 48, 204, 199, 59, 204, 199, 204, 130, 204, 185, 204, 180, 33, 204, 164, 204, 198, 204, 185, 86, 40, 21, 204, 180, 31, 11, 204, 237, 13, 204, 201, 204, 187, 204, 181, 204, 146, 204, 172, 204, 142, 93, 204, 158, 
57, 86, 25, 36, 204, 188, 72, 80, 96, 101, 26, 204, 225, 204, 239, 100, 40, 204, 176, 204, 166, 104, 19, 204, 199, 21, 204, 151, 204, 224, 204, 250, 204, 174, 79, 204, 234, 102, 83, 111, 46, 68, 204, 167, 204, 221, 8, 122, 204, 149, 204, 252], publisher: None, expires: None }, PeerId(\"12D3KooWLmfdTmmTtKDwcKey28sybA1Kh9SShwAjf84e8pnPsSZT\"): Record { key: Key(b\"\\0\\xd5\\xa4s\\xf9\\x10\\x9d\\xfe\\xbd\\xdeo\\xf6H\\x0b_\\xee\\xf1bX\\xa7X\\xb4\\\"9\\xe1\\xf8;\\xd3w\\xf9\\xc7\\xb8\"), value: [145, 2, 148, 220, 0, 48, 204, 170, 204, 165, 24, 204, 162, 204, 207, 204, 130, 96, 204, 246, 204, 190, 204, 188, 118, 204, 156, 22, 204, 184, 20, 126, 204, 162, 21, 204, 173, 204, 245, 105, 105, 100, 204, 151, 204, 183, 204, 252, 31, 37, 8, 35, 204, 216, 204, 154, 73, 204, 153, 1, 204, 135, 204, 232, 63, 204, 192, 204, 249, 204, 174, 28, 204, 243, 204, 212, 74, 204, 251, 125, 204, 206, 0, 129, 177, 83, 99, 114, 97, 116, 99, 104, 112, 97, 100, 65, 100, 100, 114, 101, 115, 115, 220, 0, 48, 204, 178, 36, 204, 145, 24, 30, 204, 166, 54, 204, 143, 204, 229, 116, 29, 90, 19, 204, 255, 57, 204, 226, 204, 135, 29, 204, 161, 60, 204, 190, 204, 208, 204, 220, 204, 178, 204, 238, 119, 204, 195, 204, 165, 20, 103, 35, 204, 211, 204, 233, 87, 93, 204, 190, 60, 87, 204, 191, 124, 118, 204, 155, 48, 204, 200, 71, 204, 193, 204, 206, 7, 220, 0, 96, 204, 177, 204, 217, 204, 141, 204, 180, 204, 243, 36, 204, 136, 204, 150, 204, 251, 8, 204, 151, 204, 203, 204, 157, 204, 165, 64, 204, 159, 58, 1, 204, 198, 49, 122, 204, 253, 108, 58, 11, 126, 29, 83, 204, 164, 204, 189, 61, 65, 204, 150, 204, 166, 4, 93, 87, 204, 191, 106, 204, 193, 23, 102, 25, 204, 139, 204, 163, 33, 95, 204, 171, 15, 204, 179, 204, 185, 204, 156, 114, 204, 134, 204, 214, 204, 221, 20, 77, 19, 204, 152, 56, 204, 156, 79, 204, 215, 7, 114, 126, 204, 171, 73, 88, 34, 204, 233, 38, 26, 14, 65, 204, 150, 204, 236, 118, 35, 53, 23, 22, 65, 204, 233, 120, 204, 233, 81, 90, 204, 232, 119, 9, 107, 31, 204, 131, 9], publisher: None, 
expires: None }}"), payment: None }))

2025-07-02T01:52:30.027683Z ERROR ThreadId(03) autonomi::client::data_types::scratchpad: /cargo/registry/src/index.crates.io-1949cf8c6b5b557f/autonomi-0.5.0/src/client/data_types/scratchpad.rs:104: Got multiple conflicting scratchpads for Key(b"\xea\xb9\x8eY\xdc\x8ew\x01\x0c\xf95\x96?\x07sb\x92A\x9b\x91\x03\xd7\xb1/K\xc2\xa6\xafR\xc5\xbdw") with the latest version, returning the first one

2025-07-02T01:53:28.834378Z ERROR ThreadId(04) autonomi::client::data_types::pointer: /cargo/registry/src/index.crates.io-1949cf8c6b5b557f/autonomi-0.5.0/src/client/data_types/pointer.rs:288: Failed to update pointer at address aaa518a2cf8260f6bebc769c16b8147ea215adf569696497b7fc1f250823d89a49990187e83fc0f9ae1cf3d44afb7dce to the network: Put verification failed: Peers have conflicting entries for this record: {PeerId("12D3KooWSuTm6wx2myt5BVY6JGXyBZ7eVhYdbeLAub66iKBA5wTV"): Record { key: Key(b"\0\xd5\xa4s\xf9\x10\x9d\xfe\xbd\xdeo\xf6H\x0b_\xee\xf1bX\xa7X\xb4\"9\xe1\xf8;\xd3w\xf9\xc7\xb8"), value: [145, 2, 148, 220, 0, 48, 204, 170, 204, 165, 24, 204, 162, 204, 207, 204, 130, 96, 204, 246, 204, 190, 204, 188, 118, 204, 156, 22, 204, 184, 20, 126, 204, 162, 21, 204, 173, 204, 245, 105, 105, 100, 204, 151, 204, 183, 204, 252, 31, 37, 8, 35, 204, 216, 204, 154, 73, 204, 153, 1, 204, 135, 204, 232, 63, 204, 192, 204, 249, 204, 174, 28, 204, 243, 204, 212, 74, 204, 251, 125, 204, 206, 2, 129, 177, 83, 99, 114, 97, 116, 99, 104, 112, 97, 100, 65, 100, 100, 114, 101, 115, 115, 220, 0, 48, 204, 178, 36, 204, 145, 24, 30, 204, 166, 54, 204, 143, 204, 229, 116, 29, 90, 19, 204, 255, 57, 204, 226, 204, 135, 29, 204, 161, 60, 204, 190, 204, 208, 204, 220, 204, 178, 204, 238, 119, 204, 195, 204, 165, 20, 103, 35, 204, 211, 204, 233, 87, 93, 204, 190, 60, 87, 204, 191, 124, 118, 204, 155, 48, 204, 200, 71, 204, 193, 204, 206, 7, 220, 0, 96, 204, 150, 0, 98, 204, 200, 92, 20, 114, 22, 204, 166, 204, 183, 40, 204, 238, 49, 96, 41, 18, 59, 204, 169, 45, 70, 204, 150, 204, 246, 20, 106, 204, 190, 204, 211, 204, 222, 64, 114, 204, 136, 204, 154, 7, 204, 215, 36, 204, 162, 75, 75, 204, 199, 3, 204, 204, 204, 171, 115, 204, 252, 13, 109, 73, 25, 204, 154, 10, 21, 204, 149, 204, 252, 121, 204, 223, 96, 204, 207, 204, 242, 71, 204, 188, 8, 204, 208, 122, 99, 103, 28, 204, 138, 65, 204, 141, 59, 16, 13, 204, 222, 39, 204, 235, 72, 2, 204, 202, 51, 52, 204, 196, 204, 158, 204, 222, 103, 
204, 162, 114, 204, 218, 204, 153, 102, 204, 158, 204, 235, 204, 252, 204, 152, 204, 148, 30, 204, 188, 204, 201], publisher: None, expires: None }, PeerId("12D3KooWLmfdTmmTtKDwcKey28sybA1Kh9SShwAjf84e8pnPsSZT"): Record { key: Key(b"\0\xd5\xa4s\xf9\x10\x9d\xfe\xbd\xdeo\xf6H\x0b_\xee\xf1bX\xa7X\xb4\"9\xe1\xf8;\xd3w\xf9\xc7\xb8"), value: [145, 2, 148, 220, 0, 48, 204, 170, 204, 165, 24, 204, 162, 204, 207, 204, 130, 96, 204, 246, 204, 190, 204, 188, 118, 204, 156, 22, 204, 184, 20, 126, 204, 162, 21, 204, 173, 204, 245, 105, 105, 100, 204, 151, 204, 183, 204, 252, 31, 37, 8, 35, 204, 216, 204, 154, 73, 204, 153, 1, 204, 135, 204, 232, 63, 204, 192, 204, 249, 204, 174, 28, 204, 243, 204, 212, 74, 204, 251, 125, 204, 206, 0, 129, 177, 83, 99, 114, 97, 116, 99, 104, 112, 97, 100, 65, 100, 100, 114, 101, 115, 115, 220, 0, 48, 204, 178, 36, 204, 145, 24, 30, 204, 166, 54, 204, 143, 204, 229, 116, 29, 90, 19, 204, 255, 57, 204, 226, 204, 135, 29, 204, 161, 60, 204, 190, 204, 208, 204, 220, 204, 178, 204, 238, 119, 204, 195, 204, 165, 20, 103, 35, 204, 211, 204, 233, 87, 93, 204, 190, 60, 87, 204, 191, 124, 118, 204, 155, 48, 204, 200, 71, 204, 193, 204, 206, 7, 220, 0, 96, 204, 177, 204, 217, 204, 141, 204, 180, 204, 243, 36, 204, 136, 204, 150, 204, 251, 8, 204, 151, 204, 203, 204, 157, 204, 165, 64, 204, 159, 58, 1, 204, 198, 49, 122, 204, 253, 108, 58, 11, 126, 29, 83, 204, 164, 204, 189, 61, 65, 204, 150, 204, 166, 4, 93, 87, 204, 191, 106, 204, 193, 23, 102, 25, 204, 139, 204, 163, 33, 95, 204, 171, 15, 204, 179, 204, 185, 204, 156, 114, 204, 134, 204, 214, 204, 221, 20, 77, 19, 204, 152, 56, 204, 156, 79, 204, 215, 7, 114, 126, 204, 171, 73, 88, 34, 204, 233, 38, 26, 14, 65, 204, 150, 204, 236, 118, 35, 53, 23, 22, 65, 204, 233, 120, 204, 233, 81, 90, 204, 232, 119, 9, 107, 31, 204, 131, 9], publisher: None, expires: None }}

2025-07-02T01:53:28.834501Z ERROR ThreadId(04) colonylib::pod: /cargo/registry/src/index.crates.io-1949cf8c6b5b557f/colonylib-0.4.3/src/pod.rs:1845: Error occurred: Pointer(PutError(Network { address: NetworkAddress::PointerAddress(aaa518a2cf8260f6bebc769c16b8147ea215adf569696497b7fc1f250823d89a49990187e83fc0f9ae1cf3d44afb7dce) - (acae9b20a0dc6d7da27aa34239d97ee231fd389b014eace164e0171e7fd28969), network_error: PutRecordVerification("Peers have conflicting entries for this record: {PeerId(\"12D3KooWSuTm6wx2myt5BVY6JGXyBZ7eVhYdbeLAub66iKBA5wTV\"): Record { key: Key(b\"\\0\\xd5\\xa4s\\xf9\\x10\\x9d\\xfe\\xbd\\xdeo\\xf6H\\x0b_\\xee\\xf1bX\\xa7X\\xb4\\\"9\\xe1\\xf8;\\xd3w\\xf9\\xc7\\xb8\"), value: [145, 2, 148, 220, 0, 48, 204, 170, 204, 165, 24, 204, 162, 204, 207, 204, 130, 96, 204, 246, 204, 190, 204, 188, 118, 204, 156, 22, 204, 184, 20, 126, 204, 162, 21, 204, 173, 204, 245, 105, 105, 100, 204, 151, 204, 183, 204, 252, 31, 37, 8, 35, 204, 216, 204, 154, 73, 204, 153, 1, 204, 135, 204, 232, 63, 204, 192, 204, 249, 204, 174, 28, 204, 243, 204, 212, 74, 204, 251, 125, 204, 206, 2, 129, 177, 83, 99, 114, 97, 116, 99, 104, 112, 97, 100, 65, 100, 100, 114, 101, 115, 115, 220, 0, 48, 204, 178, 36, 204, 145, 24, 30, 204, 166, 54, 204, 143, 204, 229, 116, 29, 90, 19, 204, 255, 57, 204, 226, 204, 135, 29, 204, 161, 60, 204, 190, 204, 208, 204, 220, 204, 178, 204, 238, 119, 204, 195, 204, 165, 20, 103, 35, 204, 211, 204, 233, 87, 93, 204, 190, 60, 87, 204, 191, 124, 118, 204, 155, 48, 204, 200, 71, 204, 193, 204, 206, 7, 220, 0, 96, 204, 150, 0, 98, 204, 200, 92, 20, 114, 22, 204, 166, 204, 183, 40, 204, 238, 49, 96, 41, 18, 59, 204, 169, 45, 70, 204, 150, 204, 246, 20, 106, 204, 190, 204, 211, 204, 222, 64, 114, 204, 136, 204, 154, 7, 204, 215, 36, 204, 162, 75, 75, 204, 199, 3, 204, 204, 204, 171, 115, 204, 252, 13, 109, 73, 25, 204, 154, 10, 21, 204, 149, 204, 252, 121, 204, 223, 96, 204, 207, 204, 242, 71, 204, 188, 8, 204, 208, 122, 99, 103, 28, 204, 138, 65, 204, 141, 
59, 16, 13, 204, 222, 39, 204, 235, 72, 2, 204, 202, 51, 52, 204, 196, 204, 158, 204, 222, 103, 204, 162, 114, 204, 218, 204, 153, 102, 204, 158, 204, 235, 204, 252, 204, 152, 204, 148, 30, 204, 188, 204, 201], publisher: None, expires: None }, PeerId(\"12D3KooWLmfdTmmTtKDwcKey28sybA1Kh9SShwAjf84e8pnPsSZT\"): Record { key: Key(b\"\\0\\xd5\\xa4s\\xf9\\x10\\x9d\\xfe\\xbd\\xdeo\\xf6H\\x0b_\\xee\\xf1bX\\xa7X\\xb4\\\"9\\xe1\\xf8;\\xd3w\\xf9\\xc7\\xb8\"), value: [145, 2, 148, 220, 0, 48, 204, 170, 204, 165, 24, 204, 162, 204, 207, 204, 130, 96, 204, 246, 204, 190, 204, 188, 118, 204, 156, 22, 204, 184, 20, 126, 204, 162, 21, 204, 173, 204, 245, 105, 105, 100, 204, 151, 204, 183, 204, 252, 31, 37, 8, 35, 204, 216, 204, 154, 73, 204, 153, 1, 204, 135, 204, 232, 63, 204, 192, 204, 249, 204, 174, 28, 204, 243, 204, 212, 74, 204, 251, 125, 204, 206, 0, 129, 177, 83, 99, 114, 97, 116, 99, 104, 112, 97, 100, 65, 100, 100, 114, 101, 115, 115, 220, 0, 48, 204, 178, 36, 204, 145, 24, 30, 204, 166, 54, 204, 143, 204, 229, 116, 29, 90, 19, 204, 255, 57, 204, 226, 204, 135, 29, 204, 161, 60, 204, 190, 204, 208, 204, 220, 204, 178, 204, 238, 119, 204, 195, 204, 165, 20, 103, 35, 204, 211, 204, 233, 87, 93, 204, 190, 60, 87, 204, 191, 124, 118, 204, 155, 48, 204, 200, 71, 204, 193, 204, 206, 7, 220, 0, 96, 204, 177, 204, 217, 204, 141, 204, 180, 204, 243, 36, 204, 136, 204, 150, 204, 251, 8, 204, 151, 204, 203, 204, 157, 204, 165, 64, 204, 159, 58, 1, 204, 198, 49, 122, 204, 253, 108, 58, 11, 126, 29, 83, 204, 164, 204, 189, 61, 65, 204, 150, 204, 166, 4, 93, 87, 204, 191, 106, 204, 193, 23, 102, 25, 204, 139, 204, 163, 33, 95, 204, 171, 15, 204, 179, 204, 185, 204, 156, 114, 204, 134, 204, 214, 204, 221, 20, 77, 19, 204, 152, 56, 204, 156, 79, 204, 215, 7, 114, 126, 204, 171, 73, 88, 34, 204, 233, 38, 26, 14, 65, 204, 150, 204, 236, 118, 35, 53, 23, 22, 65, 204, 233, 120, 204, 233, 81, 90, 204, 232, 119, 9, 107, 31, 204, 131, 9], publisher: None, expires: None }}"), payment: None }))
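
To make the conflict easier to read, the `value` arrays of the two peers can be compared byte by byte. Below is a minimal sketch; `diff_positions` is a hypothetical helper written for this post, not part of `autonomi` or `colonylib`, and the two short arrays are an excerpt copied from the first error above rather than the full records.

```rust
/// Return every index at which the two byte slices differ.
/// If the lengths differ, the extra indices of the longer slice are reported too.
fn diff_positions(a: &[u8], b: &[u8]) -> Vec<usize> {
    let common = a.len().min(b.len());
    let mut out: Vec<usize> = (0..common).filter(|&i| a[i] != b[i]).collect();
    out.extend(common..a.len().max(b.len()));
    out
}

fn main() {
    // Excerpt from the first error: the bytes around the first mismatch
    // in the record reported by each peer.
    let peer_a = [204u8, 251, 125, 204, 206, 1, 129, 177, 83, 99];
    let peer_b = [204u8, 251, 125, 204, 206, 0, 129, 177, 83, 99];

    for i in diff_positions(&peer_a, &peer_b) {
        println!("index {i}: {} vs {}", peer_a[i], peer_b[i]);
    }
}
```

In the logs above, the first mismatch is the single byte just before the `ScratchpadAddress` string: `1` vs `0` in the first error and `2` vs `0` in the second. The final 96-byte block also differs between the peers. My guess is that the single byte is the pointer counter and the trailing block is the signature, which would mean one peer is still holding the original version, but that reading of the layout is an assumption on my part.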