Apologies — Post removed until I can get in touch with @folaht

works nicely on my Mac

One time I got this one:

```
infinite-safe-upload.sh>> filename:216470aa5083690c -- 5114434
infinite-safe-upload.sh>> FilesContainer created at: "safe://hyryyryiafsdufnkwxi8nwoptjnzgt6te6rfbmyq1ihpgxhwmswcua4163rnra?v=hnrijhg7qx435iejzzjm1i5oc3qgw3s3b36ej9r7wwe8nmgxjnfey"
+---+------------------+---------------------------------------------------------------------------------------------------------------------------------------------+
| E | 216470aa5083690c | <ClientError: Did not receive sufficient ACK messages from Elders to be sure this cmd (MsgId(f6de..ba09)) passed, expected: 7, received 6.> |
+---+------------------+---------------------------------------------------------------------------------------------------------------------------------------------+
```

@maidsafe bug or OK?

Thanks for this script it works like a charm, having fun with it!

```
node-1: 32K total
node-23: 24K total
node-16: 24K total
node-24: 24K total
node-15: 24K total
node-3: 24K total
node-21: 24K total
```

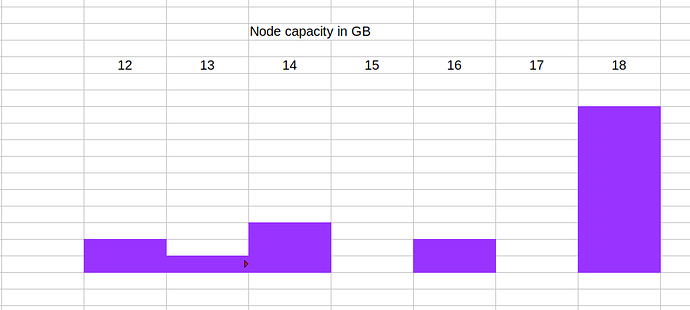

```
node-9: 12G total
node-22: 13G total
node-11: 14G total
node-5: 18G total
node-7: 14G total
node-6: 18G total
node-12: 16G total
node-18: 16G total
node-8: 18G total
node-19: 12G total
node-4: 18G total
node-2: 18G total
node-10: 18G total
node-17: 14G total
node-13: 18G total
node-20: 18G total
node-14: 18G total
node-25: 18G total
```

So this testnet is successful so far, isn’t it? And does it contain other kinds of nodes, or only nodes that store data? What is the next step?

12G vs 18G is a surprisingly large spread to me, given that the max file size is 10 MB and files should be evenly distributed between nodes by the XorName (hash) function.

Is there a known/expected explanation for this? If not, that seems a possible bug and area for investigation.
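To put a number on that spread, here is a small sketch that summarises a listing like the one above. It assumes the listing is saved one node per line in the `node-N: SIZE total` form shown (for example in a file called `sizes.txt`, a hypothetical name), and it only counts G-suffixed sizes so that the ~24K elders are left out. `node_spread` is an illustrative helper, not part of any Safe tooling.

```sh
#!/bin/sh
# node_spread: read lines like "node-9: 12G total" on stdin and report how
# evenly the adults are filled.
# Assumption: sizes end in K, M or G as in the listing; only G-sized entries
# are counted, which skips the elders (~24K) and keeps the adults.
node_spread() {
  awk '
    $2 ~ /G$/ {
      size = $2 + 0              # "18G" -> 18 via awk numeric-prefix conversion
      if (n == 0 || size < min) min = size
      if (n == 0 || size > max) max = size
      sum += size
      n++
    }
    END {
      if (n == 0) { print "no adult sizes found"; exit 1 }
      printf "adults=%d min=%dG max=%dG mean=%.1fG max/min=%.2f\n", n, min, max, sum / n, max / min
    }
  '
}
```

Usage: `node_spread < sizes.txt`. For the 18 adult figures above, the smallest is 12G and the largest 18G, a max/min ratio of 1.5.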

Remind me how these are connected: are they all connected to all, or grouped? Change over time will be interesting… I hope this can stay up for a while.

Edit: just wondering, in the extreme there would be all sorts of fluid mechanics that could be parallel to this… viscosity of the movement of data.

Yaaay for fluidics!!!

Did a final year project on fluidics 40+ years ago and may have thought about the subject at least twice since.

I have noted similar disparities in adult sizes when running testnets at home with 30+ nodes.

Maybe it’s the “closest to the XOR address” rule that is causing these disparities?

Indeed, but remember the “avalanche” in hashing: tiny input differences lead to huge output differences. So if I upload a series of 20 burst photos of a nearly static scene over 2-3 seconds, I would expect a fairly random distribution of the resulting XOR addresses.

Or am I wrong here?
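A quick way to see the avalanche effect is to hash two inputs that differ in a single character and count how many hex digits of the digests differ. This sketch uses SHA-256 via coreutils `sha256sum` purely as a stand-in (on macOS, `shasum -a 256` is the usual equivalent); it does not assume which hash Safe actually uses for XOR addresses, and the frame labels are made up to stand in for photo contents.

```sh
#!/bin/sh
# digest: print only the hex SHA-256 digest of $1
# (cut drops the trailing "  -" that sha256sum prints for stdin).
digest() {
  printf '%s' "$1" | sha256sum | cut -d ' ' -f 1
}

# hex_diff: count the positions at which two equal-length strings differ.
hex_diff() {
  x=$1 y=$2 count=0
  while [ -n "$x" ]; do
    # ${x%"${x#?}"} is the first character of x
    if [ "${x%"${x#?}"}" != "${y%"${y#?}"}" ]; then
      count=$((count + 1))
    fi
    x=${x#?} y=${y#?}
  done
  echo "$count"
}

# Two hypothetical inputs differing in one character, like consecutive frames.
d1=$(digest "burst-photo-frame-01")
d2=$(digest "burst-photo-frame-02")
echo "$d1"
echo "$d2"
echo "differing hex digits: $(hex_diff "$d1" "$d2") of ${#d1}"
```

For two unrelated 64-digit hex digests, about 60 positions (64 × 15/16) are expected to differ on average, since each position matches by chance with probability 1/16.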

I don’t think you are wrong. My expectation would be that in this test all adults display the same (rounded) size all the time, because (a) the files are chunked into pieces of (I think) 1 MB, which are supposed to be distributed evenly across nodes, and (b) the sizes are being reported rounded to the nearest GB.

So I don’t think this test-case can be considered a PASS until that behavior is achieved. (or someone clearly explains why present behavior is valid/correct.)
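Whether “distributed evenly” should mean “equal per node” can be checked with a toy model. The sketch below gives each node and each chunk a 32-bit ID taken from a SHA-256 prefix, stores every chunk on the one node whose ID is closest by XOR distance, and counts chunks per node. The simplifications are assumptions, not Safe's real parameters: 32-bit IDs instead of full XorNames, one copy per chunk rather than several, and hypothetical `node-N`/`chunk-N` labels. Even with perfectly random hashes, XOR-closest assignment gives each node a different-sized slice of the address space, so the per-node counts need not come out equal.

```sh
#!/bin/sh
# Toy model of XOR-closest chunk placement (assumptions: 32-bit IDs,
# a single copy per chunk, made-up node/chunk labels).

# id32: first 8 hex digits of the SHA-256 digest of $1, as a 0x constant.
id32() {
  printf '0x%s\n' "$(printf '%s' "$1" | sha256sum | cut -c 1-8)"
}

# simulate NODES CHUNKS: print "count node-N" lines, busiest node first.
simulate() {
  nodes=$1 chunks=$2
  node_ids=""
  i=1
  while [ "$i" -le "$nodes" ]; do
    node_ids="$node_ids $(id32 "node-$i")"
    i=$((i + 1))
  done

  j=1
  while [ "$j" -le "$chunks" ]; do
    c=$(id32 "chunk-$j")
    best=0 best_dist=-1
    n=1
    for id in $node_ids; do
      dist=$(( $c ^ $id ))   # XOR distance between chunk ID and node ID
      if [ "$best_dist" -lt 0 ] || [ "$dist" -lt "$best_dist" ]; then
        best=$n best_dist=$dist
      fi
      n=$((n + 1))
    done
    echo "node-$best"        # chunk goes to the XOR-closest node
    j=$((j + 1))
  done | sort | uniq -c | sort -rn
}

simulate "${NODES:-18}" "${CHUNKS:-500}"
```

To try other sizes, run it with environment overrides such as `NODES=25 CHUNKS=2000 sh xor_sim.sh` (script name hypothetical); larger runs take longer because every ID costs a few process spawns.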

12G seems an outlier; others like 24G and 18G are more prevalent and evenly distributed. Perhaps a bug?

True, but there are a few at 13 and 14 GB as well.

The “24” you see is 24K: those are the elders, who are too posh to dirty their hands with actually storing the data.

The outlier bug? Lol

Personally, I’m not very surprised at the variance. Just because something is designed to distribute data over many devices doesn’t mean it will end up evenly distributed all the time. There will be some mystery factor keeping it from being as even as you’d think. Slow disk writes, network congestion on some nodes, and longer routes to some nodes are just a few possibilities that spring to mind.

Given that the nodes are cloud-based and all with one cloud provider, it could be something related to that. Imagine if some of the nodes have ended up in the same rack using the same top-of-rack (TOR) switch, and SAFE is trying to use all of the 24 nodes equally, but those few in the same rack are saturating that TOR switch and getting poor performance, so SAFE backs off from using them as much. Or imagine some of the nodes have ended up in the same rack as another customer’s instances that are using a lot of network bandwidth. Maybe there’s some network weirdness going on where some of the nodes appear to be more hops away from the others and from the internet. There’s no telling with all this cloud stuff!

There might be much less variance as the system fills and even the nodes with a higher ‘cost’ start to become attractive.

hey! I made an addition to your script!

It checks whether there is an error in the `safe files put` output and, if so, retries the put! So far it works great: after an error it retries until there is no error!

here is the whole script with my modification!

```sh
#!/bin/sh

# Uncomment the verify_put call below to confirm uploaded data has no errors (untested & probably not necessary).

# Log a message to stdout and append it to test.log.
msg() {
  printf '%s\n' "${0##*/}>> ${1}" | tee -a "test.log"
}

# verify_put FILENAME CHECKSUM SAFE_OUTPUT
# Downloads the uploaded file again and compares its md5sum with the original.
verify_put() {
  # Take the file's safe:// link from the table row of the `safe files put` output.
  safeContainer=$(printf '%s\n' "${3}" | grep -o ' | safe://[^ ]*' | grep -o 'safe://[^ ]*')
  msg "${safeContainer}"
  safe cat "${safeContainer}" >"${1}"
  if [ "$(md5sum "${1}")" = "${2}" ]; then
    msg "${1} has good checksum"
  else
    msg "${1} has BAD checksum"
  fi
  rm "${1}"
}

while true; do
  # Random size (10 kB to 9 MB) and random 16-hex-digit name.
  filesize=$(shuf -i 10000-9000000 -n 1)
  filename=$(LC_ALL=C tr -cd 'a-f0-9' </dev/urandom | head -c 16)
  head -c "${filesize}" </dev/urandom >"${filename}"
  checksum=$(md5sum "${filename}")
  msg "filename:${filename} -- ${filesize}"

  # Keep retrying the upload until its output contains no error.
  errorBool=true
  while $errorBool; do
    safeOutput="$(safe files put "${filename}")"
    msg "${safeOutput}"
    if printf '%s\n' "${safeOutput}" | grep -q Error; then
      echo "Got an error putting: retrying"
    else
      echo "No error so deleting the file"
      errorBool=false
    fi
  done

  rm "${filename}"
  #verify_put "${filename}" "${checksum}" "${safeOutput}" &
  #sleep $(shuf -i 1-10 -n 1)s
done

exit 0
```
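The loop above retries immediately and forever, which can hammer the network if an error keeps recurring. A possible variant, sketched here with a hypothetical `retry_put` helper, caps the number of attempts and doubles the wait between tries; it also treats a non-zero exit status as a failure, in addition to the `Error` text check the script already uses. The attempt limit and delays are illustrative, not values tuned for Safe.

```sh
#!/bin/sh
# retry_put MAX_ATTEMPTS COMMAND [ARGS...]
# Runs COMMAND until it exits 0 and its output contains no "Error",
# waiting 1s, 2s, 4s, ... between attempts and giving up after MAX_ATTEMPTS.
retry_put() {
  max_attempts=$1
  shift
  attempt=1
  delay=1
  while :; do
    output=$("$@" 2>&1)     # capture stdout and stderr of this attempt
    status=$?
    printf '%s\n' "$output"
    if [ "$status" -eq 0 ] && ! printf '%s\n' "$output" | grep -q Error; then
      return 0
    fi
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "giving up after $attempt attempts" >&2
      return 1
    fi
    echo "attempt $attempt failed, retrying in ${delay}s" >&2
    sleep "$delay"
    attempt=$((attempt + 1))
    delay=$((delay * 2))    # exponential backoff
  done
}
```

Inside the script, the inner `while $errorBool` loop could then become `retry_put 5 safe files put "${filename}" || msg "giving up on ${filename}"`.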

Any chance you and/or @TylerAbeoJordan could add this to the safenetforum-community organisation on GitHub, please?

DM me your Github usernames so I can send an invite to the safenetforum-community organisation

So you mean this overall project “maybe” can’t be finished?