Status

We’ve got indexing! We’ve got search! Backend is MVP! Not only that, but colonylib is now being used to power content discovery in Mutant! Here are the details…

colonylib 0.2.0 crate released!

The colonylib crate version 0.2.0 has been uploaded to crates.io! I’ve added a ton of documentation for all of the public-facing APIs, as well as examples that you can run yourself with the standard cargo run --example framework. There is a full test suite for the internal functions. All tests pass. No errors and no warnings in the library. Docs for the public API can be found on docs.rs, and they include example code to get you started.

Want to get the full details? Check out the README and try running the examples yourself!

Shoutout to @Champii and Mutant

Before we dig into Colony, I want to recognize @Champii for his contributions to colonylib. We had a nice chat the other day and he introduced me to Augmentcode. He used this tool to build the rough cut of the following features. Instead of me hammering away at this by hand for a week or two, I just needed to spend a day fixing what the AI agent didn’t understand or broke. @Champii literally told me this tool can take 3 weeks of work and compress it down to one day, and he wasn’t kidding! He then plugged colonylib into Mutant to power its content discovery and file sharing mechanism. He built a frontend for Colony before I had a chance! And he did all this in just a couple of days! Truly amazing work. If you haven’t checked out Mutant, you should!

The best part is that content created on Mutant will be directly portable to Colony or any other frontend that leverages the colonylib library. This is what the semantic web is all about!

SPARQLy graphs!

So what does this new release do? Glad you asked.

Distributed Indexing

Colony at its core leverages a pod which stores metadata about objects stored on the network. Pods can reference each other. So the user, by referencing other users’ pods, can build a graph of all information on the network by recursively walking through all pods they are aware of and storing this index locally on their machine. This operation is done in colonylib by simply calling the refresh_ref() function. Go get a cup of coffee, and watch your index populate with everything it can find.
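refresh_ref() does the real work against the network, and the sketch below is not its implementation. It only illustrates the idea in plain Rust, assuming pods are identified by string addresses and that we already know which pods each one references (a hypothetical in-memory PodRefs map standing in for Autonomi downloads). Walking breadth-first rather than recursively gives every pod the shortest depth at which it is reachable, the same kind of depth value used for the ‘web of trust’ described in the next section, and the visited check keeps cycles between pods from looping forever.

```rust
use std::collections::{HashMap, VecDeque};

/// Hypothetical stand-in for the network: each pod address maps to the
/// addresses of the pods it references.
type PodRefs = HashMap<&'static str, Vec<&'static str>>;

/// Breadth-first walk from the user's own pod, recording the depth at which
/// each pod is first reached (0 = the user's own pod).
fn index_depths(start: &'static str, refs: &PodRefs) -> HashMap<&'static str, usize> {
    let mut depths = HashMap::new();
    let mut queue = VecDeque::new();
    depths.insert(start, 0);
    queue.push_back(start);
    while let Some(pod) = queue.pop_front() {
        let depth = depths[pod];
        for &next in refs.get(pod).map(|v| v.as_slice()).unwrap_or(&[]) {
            // Only the first (shortest) discovery counts; this also breaks cycles.
            if !depths.contains_key(next) {
                depths.insert(next, depth + 1);
                queue.push_back(next);
            }
        }
    }
    depths
}

fn main() {
    let mut refs: PodRefs = HashMap::new();
    refs.insert("me", vec!["alice", "bob"]);
    refs.insert("alice", vec!["carol", "me"]); // the cycle back to "me" is ignored
    refs.insert("bob", vec!["carol"]);
    refs.insert("carol", vec!["dave"]);

    let mut sorted: Vec<_> = index_depths("me", &refs).into_iter().collect();
    sorted.sort_by_key(|&(pod, depth)| (depth, pod));
    for (pod, depth) in sorted {
        println!("{pod}: depth {depth}");
    }
}
```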

Fun with RDF

The colonylib library leverages RDF and the oxigraph Rust library to build a local on-disk database. Originally, I thought this would only be needed for search, but I found that RDF is a super powerful concept that I leveraged for all sorts of things, and it could be used for future applications:

- I built a custom vocabulary for colonylib itself to manage pods, pod references, and pod scratchpads. No extra code required, it just works.

- I split each pod, local and referenced, into its own named graph with a depth attribute to build in the concept of a ‘web of trust’. The goal is that nodes with less depth (i.e. less recursion required to reach them) get a higher relevance in search operations. No algorithm required here: your search results are custom for you based on how close the nodes are to you. Pretty cool, right? And this is all just baked into the RDF graphs themselves.

- SPARQL queries could be used for graph metrics and visualizations by post-processing the output.

- SPARQL queries allow you to INSERT and DELETE graphs and graph entries. This enables pruning the graph locally; said another way, content moderation. I envision groups or users making curated pods with content, or even pods that remove content or other pods. The difference here is that it is the user’s choice to read in a pruning-type pod. So if you want an internet with only fluffy kitties and unicorns, you can do that, or if you want to drink from the raw, unfiltered feed of humanity’s online consciousness, you can do that too. But it’s your choice, no one else’s.
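The depth-based relevance and local pruning ideas above can be sketched in a few lines. This is a hypothetical illustration, not colonylib code: the Hit type, the ant:// IRIs, and both helper functions are made up for the example. Ranking is just a stable sort on depth, and pruning is a standard SPARQL 1.1 DELETE WHERE update that would run only against your own local store.

```rust
/// Hypothetical search hit: a matching subject IRI plus the depth of the
/// pod (named graph) it was found in. Not colonylib's actual result type.
#[derive(Debug, Clone, PartialEq)]
struct Hit {
    subject: String,
    depth: u32,
}

/// Web-of-trust ordering: hits from closer pods come first. `sort_by_key`
/// is stable, so hits at the same depth keep their original order.
fn rank_by_depth(mut hits: Vec<Hit>) -> Vec<Hit> {
    hits.sort_by_key(|h| h.depth);
    hits
}

/// Builds a SPARQL 1.1 Update that deletes every triple about `subject_iri`
/// from every named graph in the local store. Assumes the IRI has already
/// been validated, since it is interpolated directly into the query.
fn prune_subject_update(subject_iri: &str) -> String {
    format!("DELETE WHERE {{ GRAPH ?g {{ <{subject_iri}> ?p ?o }} }}")
}

fn main() {
    let hits = vec![
        Hit { subject: "ant://far".into(), depth: 2 },
        Hit { subject: "ant://mine".into(), depth: 0 },
        Hit { subject: "ant://friend".into(), depth: 1 },
    ];
    for hit in rank_by_depth(hits) {
        println!("{} (depth {})", hit.subject, hit.depth);
    }
    println!("{}", prune_subject_update("ant://far"));
}
```

A curated pruning pod could, for instance, carry a list of subject IRIs that a client turns into updates like this one, but only if the user chooses to read that pod in.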

The library itself doesn’t care what RDF schema you use; it is completely agnostic. The frontend application might, depending on how it is built. My recommendation is to use schema.org. It is the most complete vocabulary that I know of, and if applications all use something similar, that makes portability better.

Loading metadata into the pods and graph database uses JSON-LD formatted strings, making it easy to build up metadata information.
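To make the schema.org recommendation concrete, here is a minimal sketch of a JSON-LD string describing a file, built in plain Rust without a JSON crate. The file_jsonld helper, the ant:// prefix on @id, and the choice of MediaObject as the type are illustrative assumptions, not a format colonylib requires; a real application would more likely build this with serde_json than escape strings by hand.

```rust
/// Minimal JSON string escaping (quotes, backslashes, control characters).
fn json_escape(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out
}

/// Hypothetical helper: describes a file as a schema.org MediaObject in JSON-LD.
/// The `ant://` prefix on `@id` is an illustrative assumption.
fn file_jsonld(address: &str, name: &str, mime_type: &str) -> String {
    format!(
        r#"{{
  "@context": "https://schema.org",
  "@type": "MediaObject",
  "@id": "ant://{}",
  "name": "{}",
  "encodingFormat": "{}"
}}"#,
        json_escape(address),
        json_escape(name),
        json_escape(mime_type)
    )
}

fn main() {
    // The address is a made-up placeholder, not a real Autonomi address.
    println!("{}", file_jsonld("0123abcd", "Fluffy \"Kitty\" Photo", "image/jpeg"));
}
```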

Search!!!

And finally we get to search. With index building and all the RDF structure sorted out, it is trivial to search the data. The search() function issues a SPARQL query for you and can search by text, data type, or predicate, or run a raw SPARQL query for the adventurous. Results are returned in the standard SPARQL JSON results format, making them easy to plug into off-the-shelf parsing libraries.
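The exact query search() generates isn’t shown in this post, so the following is not it. It is a hypothetical sketch of what a plain text search over the per-pod named graphs could look like, built as a SPARQL string from Rust. It assumes each pod graph has its depth recorded as a triple in the default graph under a made-up predicate IRI (DEPTH_PREDICATE), so that results from closer pods sort first.

```rust
/// Hypothetical IRI for the per-pod depth triple; colonylib's real vocabulary may differ.
const DEPTH_PREDICATE: &str = "http://example.org/hypothetical#depth";

/// Quotes `s` as a SPARQL string literal, escaping characters that would
/// otherwise break out of the literal.
fn sparql_string_literal(s: &str) -> String {
    let mut out = String::from("\"");
    for c in s.chars() {
        match c {
            '\\' => out.push_str("\\\\"),
            '"' => out.push_str("\\\""),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            c => out.push(c),
        }
    }
    out.push('"');
    out
}

/// Case-insensitive substring search over literal values in every named
/// graph, with results from closer pods (lower depth) sorted first.
fn text_search_query(text: &str, limit: usize) -> String {
    let needle = sparql_string_literal(&text.to_lowercase());
    [
        "SELECT ?subject ?predicate ?object ?depth WHERE {".to_string(),
        "  GRAPH ?g { ?subject ?predicate ?object . }".to_string(),
        format!("  ?g <{}> ?depth .", DEPTH_PREDICATE),
        format!("  FILTER(isLiteral(?object) && CONTAINS(LCASE(STR(?object)), {}))", needle),
        "}".to_string(),
        "ORDER BY ASC(?depth)".to_string(),
        format!("LIMIT {}", limit),
    ]
    .join("\n")
}

fn main() {
    println!("{}", text_search_query("Kitty", 20));
}
```

Whatever query actually runs, a SELECT result in the SPARQL 1.1 JSON results format has the shape {"head": {"vars": [...]}, "results": {"bindings": [...]}}, which is why generic SPARQL client libraries can consume it directly.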

Collabs update

In addition to Mutant (have you checked this out yet?), I’m also working with the good folks at the SOMA project. They are planning to use colonylib as the backend for key management, file tracking, and search. I’m working with their team of developers so they can leverage this code base as much as possible, meaning their content will be portable as well!

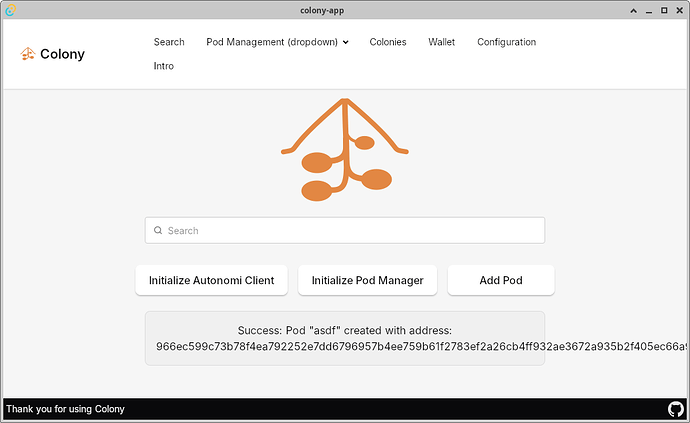

A very Colony vacation

And that concludes day 3 of my very Colony vacation. I’ve got a couple of days left and I won’t be slowing down! Maxx has a rough first pass on the Colony GUI, but he hasn’t hooked it up to the backend. My goal this week was to get the backend in a solid place, which I did! So tomorrow I will go back to the Colony repo, merge his edits over, and get the Tauri mappings in place so he can do his JavaScript magic. I’ll need to warn him, though: Mutant already has a GUI using the library, so he needs to get busy! Other than that, there are enhancements that should be made, such as dynamic pod sizing (currently each pod can only accommodate 4MB) and threading the Autonomi download operations. They’re not required for IF, but we’ll want them before this is something I’d be proud to call 1.0-level quality.

Thank you again backers!

Thank you again to the backers keeping Colony up in the rankings. The hard part is done; now we just need to make it pretty! And to the whales out there with ANT to spare for backing: please sir, can I have some more? After weeks of non-stop work, we’re finally able to search Autonomi simply(ish)!!