Seems GitHub is also testing out natural language programming with SpecLang, where the whole program is written in markdown.

Hey @dirvine a question from my buddy working on his own RAG implementation.

“I’m curious what kind of infrastructure is available on the Safe network. How are inference models deployed on the network? If you wanted to deploy a microservice which allowed for querying a vector database, would that be something that could be architected without conventional servers?”

It’s best to imagine Autonomi as a private, secure hard disk that follows you around devices. For phase I anyway; I don’t want to give too much away and steal the thunder, but start there. So: local models and so on.


Making that ubiquitous across hardware is essential. Then having Autonomi calculate the most effective model for your hardware.
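To make "calculate the most effective model for your hardware" a bit more concrete, here is a rough sketch of one possible heuristic: pick the largest model variant whose weights fit in a fraction of available memory. The catalog entries, the `headroom` factor and the "weights only" size estimate are all assumptions for illustration. Nothing here is an Autonomi API, and real selection would also need to account for KV cache, runtime overhead and compute speed.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ModelVariant:
    name: str               # hypothetical model identifier
    params_billion: float   # parameter count in billions
    bits_per_weight: int    # quantization level, e.g. 4 or 8

    def approx_size_gb(self) -> float:
        # Weights only: parameters * bits per weight / 8 bits per byte.
        return self.params_billion * self.bits_per_weight / 8


def pick_model(variants: List[ModelVariant], available_memory_gb: float,
               headroom: float = 0.8) -> Optional[ModelVariant]:
    """Return the largest variant whose weights fit in `headroom` of memory."""
    budget = available_memory_gb * headroom
    fitting = [v for v in variants if v.approx_size_gb() <= budget]
    if not fitting:
        return None
    # Prefer more parameters first, then higher precision.
    return max(fitting, key=lambda v: (v.params_billion, v.bits_per_weight))
```

With a catalog of 7B/13B/70B variants, a 16 GB machine would get the 13B 4-bit model, while a 64 GB machine could take the 70B 4-bit one.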

Then RAG, fine-tuning etc. come later, but maybe not. Large context windows? Continual learning (me back at neuroevolution ideas on open-endedness) and more are all happening fast.

In terms of vector databases, it’s similar to the actual models: we need to ensure commonality between models, hardware and “personal knowledge”.

By personal knowledge I mean some or all of:

- chat history

- conversations

- RAG

- even fine-tuned models

My goal here is to enable rather than invent. So let the industry go as fast as it can, make sure there is a SAFE place on Autonomi for whatever personalisation we have, and ensure that it is independent of hardware (back to logging in to any computer/phone and it’s your computer/phone).
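A minimal sketch of what a hardware-independent "personal knowledge" index could look like: a tiny in-memory vector store with cosine-similarity lookup that serializes to plain bytes, so the same blob could be stored anywhere and reloaded on any device. The class name, the JSON format and the toy 3-dimensional embeddings are assumptions; real embeddings would come from whatever local model you run, and encryption is left to the storage layer rather than shown here.

```python
import json
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class PersonalIndex:
    """Hypothetical portable index of (text, embedding) pairs."""

    def __init__(self) -> None:
        self.items: List[tuple] = []

    def add(self, text: str, embedding: Sequence[float]) -> None:
        self.items.append((text, list(embedding)))

    def query(self, embedding: Sequence[float], k: int = 3) -> List[str]:
        # Score every item, highest cosine similarity first.
        scored = [(cosine(embedding, e), t) for t, e in self.items]
        scored.sort(key=lambda s: s[0], reverse=True)
        return [t for _, t in scored[:k]]

    def to_bytes(self) -> bytes:
        # Plain JSON so any device can read it back.
        return json.dumps(self.items).encode("utf-8")

    @classmethod
    def from_bytes(cls, data: bytes) -> "PersonalIndex":
        idx = cls()
        for text, emb in json.loads(data.decode("utf-8")):
            idx.add(text, emb)
        return idx
```

A brute-force scan like this is fine for personal-scale data; a larger store would swap in an approximate nearest-neighbour index behind the same `query` interface.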

I changed the topic name and corrected him on the brand after the question, btw.

AI you can trust may be a particularly good selling point:

This will be a critical thing: trust that you are private and secure, but also don’t trust the outputs alone without checking them.

One thing I am playing with is agents or AIs working together.

So one answers questions, even single-shot, badly worded ones etc. However, its system prompt has a “provide links to sources” addition.

Another then checks that the sources exist and that the data is contained there.

Another then confirms the answer and, using different colours or something, can highlight verified sources and also show opinion or thought.
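The answer/check/confirm chain above could be sketched roughly like this. The LLM calls are left out: we assume the answering agent has already produced a list of claims, each with a source link and a quote it says appears there. The `fetch` function is injected so it can be a real HTTP client or a stub, and text tags stand in for the "different colours". All names here are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Claim:
    text: str                  # statement made in the answer
    source_url: Optional[str]  # link the answering agent was asked to provide
    quote: Optional[str]       # snippet the agent says appears at that source


@dataclass
class CheckedClaim:
    claim: Claim
    status: str  # "verified", "source-missing", "not-in-source" or "opinion"


def check_claims(claims: List[Claim],
                 fetch: Callable[[str], Optional[str]]) -> List[CheckedClaim]:
    """Checker agent: does the source exist, and does it contain the quote?"""
    results = []
    for c in claims:
        if c.source_url is None:
            results.append(CheckedClaim(c, "opinion"))  # no source offered
            continue
        page = fetch(c.source_url)
        if page is None:
            results.append(CheckedClaim(c, "source-missing"))
        elif c.quote and c.quote.lower() in page.lower():
            results.append(CheckedClaim(c, "verified"))
        else:
            results.append(CheckedClaim(c, "not-in-source"))
    return results


def render(checked: List[CheckedClaim]) -> str:
    """Confirming agent's output: tag each claim instead of colouring it."""
    tags = {"verified": "[OK]", "source-missing": "[NO SOURCE]",
            "not-in-source": "[UNSUPPORTED]", "opinion": "[OPINION]"}
    return "\n".join(f"{tags[c.status]} {c.claim.text}" for c in checked)
```

A substring match is the crudest possible containment check; a real checker would probably ask a second model whether the page supports the claim, but keeping it deterministic makes the first pass cheap.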

Hope you don’t mind me probing a bit, but have you made any AI hires yet?

Also, sharing any internal approaches or design choices on the forum as soon as they seem solid would be really helpful and appreciated.

It’s a pretty new frontier right now, I know, but people used to the conventional will need some of MaidSafe’s guidance and inspiration. Thanks, David.

Not yet, but we will for sure.

You are more than welcome

IMO Emad gives one of the best, simplest explanations, with practical examples for us non-techies, of how fast things are going, the mental model change required, and the need/opportunity for innovators to adapt and engage now. He talks of the need for templates (national, industry, regional, citizen…), which aligns with our recreation & rapid learning model approach and flow opportunity. I’m thinking through a gap analysis against our requirements. Would appreciate your thoughts, insights, intersection points?

https://www.youtube.com/watch?v=ciX_iFGyS0M

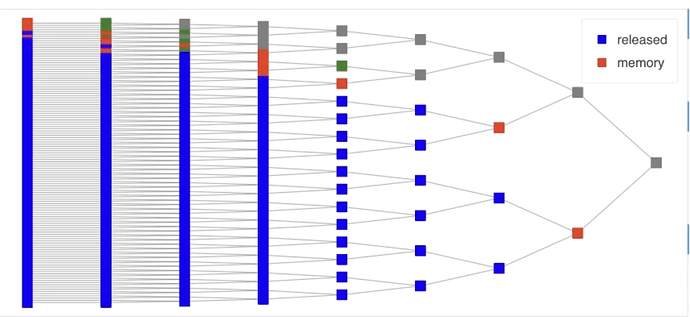

It would be cool if we could create something similar to MapReduce. Let’s say I have a scientific dataset stored across the Autonomi network.

Option A would be to load everything into a huge vertical server (beefy memory specs).

Option B would be to make partitions of the data spread across the network. I assume local compute servers would act as slaves and be instructed by a master node on what they should fetch and calculate. The locally computed aggregates are then collected and combined.

It’s roughly how Dask, Hadoop or Spark operate; in Spark, these distributed datasets are called RDDs (resilient distributed datasets).
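Option B in miniature: each worker reduces its own partition to a small aggregate, and the master combines the aggregates. Here the partitions are in-memory lists and a thread pool stands in for remote workers; fetching partitions from the network is assumed and not shown. Computing a mean is used as the example job.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def map_partition(partition: List[float]) -> Tuple[float, int]:
    # Worker side: ship back (sum, count), not the raw data.
    return (sum(partition), len(partition))


def reduce_aggregates(aggs: List[Tuple[float, int]]) -> float:
    # Master side: combine partial aggregates into the global result.
    total = sum(s for s, _ in aggs)
    count = sum(n for _, n in aggs)
    return total / count if count else float("nan")


def distributed_mean(partitions: List[List[float]], max_workers: int = 4) -> float:
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        aggs = list(pool.map(map_partition, partitions))
    return reduce_aggregates(aggs)
```

The key design choice is returning `(sum, count)` rather than each partition's mean: for partitions `[1, 2, 3]`, `[4, 5]` and `[6]`, averaging the local means gives about 4.17, while the correct global mean is 3.5.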

User-curated/approved data sources in the ML processes that feed and tune the LLM and its genAI responses to engineered prompts are key, imo. What is needed is some sort of whitelist feature, under the user’s control, to select the data sources used by ML in the learning and iterative tuning cycles that improve the LLM.

I.e., for some, using WaPo or the NY Times as a data source is a non-starter; many also don’t trust ‘parts of Wikipedia’, etc., so they also need to be able to blacklist those sites either wholesale or selectively (e.g. by news category).
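One way the whitelist/blacklist idea could work, as a rough sketch: a block rule is a `(domain, category)` pair, where a category of `None` blocks the whole site and a named category blocks only that part of it. An optional allowlist restricts everything else. How documents get a category label (e.g. "news") is assumed to come from elsewhere; the rule format is hypothetical.

```python
from typing import Iterable, Optional, Set, Tuple
from urllib.parse import urlparse

# (domain, category); category None means "block the whole domain".
BlockRule = Tuple[str, Optional[str]]


def _host_matches(host: str, domain: str) -> bool:
    # Match the domain itself or any subdomain, but not lookalikes.
    return host == domain or host.endswith("." + domain)


def is_allowed(url: str, category: str,
               block_rules: Iterable[BlockRule] = (),
               allow_domains: Optional[Set[str]] = None) -> bool:
    """Decide whether a document may be used for training/tuning/retrieval."""
    host = (urlparse(url).hostname or "").lower()
    for domain, blocked_category in block_rules:
        if _host_matches(host, domain) and blocked_category in (None, category):
            return False  # wholesale or selective block
    if allow_domains is not None:
        return any(_host_matches(host, d) for d in allow_domains)
    return True
```

For example, the rules `[("nytimes.com", None), ("wikipedia.org", "news")]` drop everything from the NY Times but only Wikipedia's news-category pages.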

some food for thought

Question: will Autonomi nodes work the same way as, or similarly to, server farms when running an Autonomi LLM? At the moment we are at least 5 to 10 years away from having actual mass-produced AI chips designed to run LLMs.

Otherwise AI models are run on gaming chips, and there are not enough of them for mass adoption; plus, cooling the servers takes ridiculous amounts of electricity. If Autonomi could somehow solve this problem, it would be great. Am I wrong?

The alternative to graphics cards is Apple hardware. With a MacBook you can already run 40 GB models locally without issues (6 tokens per second, so faster than you can read, and about the speed of ChatGPT when it started; actually I think it’s faster).
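A quick back-of-the-envelope check of those numbers. Two assumptions: roughly 0.75 English words per token (a common rule of thumb), and that token generation is memory-bandwidth bound, so each token reads about all the weights once. The 400 GB/s figure is roughly the unified-memory bandwidth of an M1/M2 Max chip; other Macs differ.

```python
def words_per_minute(tokens_per_second: float, words_per_token: float = 0.75) -> float:
    # Convert generation speed into reading-speed units.
    return tokens_per_second * words_per_token * 60


def max_tokens_per_second(model_size_gb: float, memory_bandwidth_gb_s: float) -> float:
    # Upper bound if every generated token streams all weights from memory once.
    return memory_bandwidth_gb_s / model_size_gb


print(words_per_minute(6))             # 270.0
print(max_tokens_per_second(40, 400))  # 10.0
```

So 6 tokens/s is about 270 words per minute, in the range of often-quoted adult reading speeds, and a ceiling of roughly 10 tokens/s for a 40 GB model is consistent with seeing about 6 in practice.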

So that would mean the Autonomi network could run its own native LLM? I’m just trying to understand if there is an advantage to running AI on Autonomi. Thanks.

You could fetch your model from Autonomi (immutable data, so it can’t be altered), keep your context (name, age, sex, address, interests, language, family,…) stored in your Autonomi account and injected into the local conversation, and simply run the LLM locally.

So the benefit is a local LLM plus synchronised, encrypted context that makes the LLM more useful and comfortable for you.

(Of course, only for Apple users, not everyone.)
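The two local steps in that flow could look something like this: check that the downloaded model bytes match the digest you expected, then turn the stored context into a system prompt. Fetching from Autonomi is not shown, and a plain SHA-256 comparison is used as a stand-in for the network's own content addressing, whose exact scheme is not reproduced here. The prompt wording and context keys are hypothetical.

```python
import hashlib
from typing import Dict


def verify_model(blob: bytes, expected_sha256: str) -> bool:
    # Stand-in integrity check: the bytes must hash to the expected digest.
    return hashlib.sha256(blob).hexdigest() == expected_sha256


def build_system_prompt(context: Dict[str, str]) -> str:
    # Inject the user's stored context into the local conversation.
    lines = [f"- {key}: {value}" for key, value in sorted(context.items())]
    return "You are a personal assistant. Known facts about the user:\n" + "\n".join(lines)
```

Only after `verify_model` passes would the weights be handed to the local runtime, with `build_system_prompt(...)` prepended to the conversation.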

Just to mention it: there are many use cases for small LLMs too (summarising/grepping info from provided context, drafts/answers in simpler language)… And my company laptop comes with a GPU with 6 GB of RAM… So with LLMs getting better even when small, for certain use cases it’s already not Apple-exclusive…

But getting results in very nice language, precise translations etc. is currently Apple-silicon-only (or needs graphics cards in the five-digit price range…), imho.

Slurm is used today to grab/reserve GPU resources per AI workload, one job at a time, as needed for inference, tuning and the like, but it’s really heavy in the provisioning sense, relying on Kubernetes et al. We see this setup in use at CMU and the University of Pittsburgh.

Meanwhile dstack is emerging (TU Munich) as a much lighter-weight provisioning system without Kubernetes (about 71 MB for the dstack server). So in theory an aspiring Autonomi Network developer could take dstack, run it on their system, and then check the prices of available GPUs on systems that want to share their local GPU, creating a swarm of resources to run the job.

At our little company we are looking at how to do that with cheap NUCs running Ubuntu 24.04 with integrated Intel GPUs, in our two-unit lab case. They are just 12th-gen i7s with 32 GB RAM (expandable to 64 GB) in the Intel Core form factor, with a 1 TB NVMe SSD (expandable to 2 TB). Resting power is low, but when the embedded Intel GPU kicks in, the draw will likely go north of 110 W.

We are looking at how to create a CPU and GPU swarm generally and set up the ephemeral storage volumes needed, etc. So in practice, a user wanting to run an AI workload specs their needs via dstack, which advertises the job to those flagged as resource renters; they then quote their price to the desired swarm, an aggregated price from the nodes that have GPU resources available for sale.
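The "aggregated price from those nodes" step could be sketched as a greedy quote: fill the requested GPU count from the cheapest offers first and sum the cost. This is not dstack's API; `Offer` and `quote_swarm` are hypothetical, and a real scheduler would also weigh network locality, reliability and memory per GPU.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Offer:
    node_id: str               # hypothetical renter node identifier
    gpus: int                  # GPUs this node is willing to rent out
    price_per_gpu_hour: float  # asking price per GPU-hour


def quote_swarm(offers: List[Offer], gpus_needed: int,
                hours: float) -> Optional[Tuple[List[Tuple[str, int]], float]]:
    """Greedy aggregate quote: cheapest GPUs first until the need is met."""
    allocation: List[Tuple[str, int]] = []
    remaining = gpus_needed
    total = 0.0
    for o in sorted(offers, key=lambda o: o.price_per_gpu_hour):
        if remaining == 0:
            break
        take = min(o.gpus, remaining)
        allocation.append((o.node_id, take))
        total += take * o.price_per_gpu_hour * hours
        remaining -= take
    if remaining > 0:
        return None  # the swarm cannot satisfy the request
    return allocation, total
```

For offers of 1 GPU at 0.3, 2 at 0.5 and 4 at 0.8 per GPU-hour, a 4-GPU, 2-hour job is quoted as 1 + 2 + 1 GPUs for a total of about 4.2.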

NB: there are new NUCs emerging with the ability to add a beefier GPU while keeping overall power consumption low, say under 150 W peak… My cohort is at CES in Las Vegas next week checking out the new stuff…

Again, in theory one could also implement BitNet’s 1.58-bit ternary (-1/0/+1) matrix math and use a modified dstack to provision an AI job to this hypothetical NUC+GPU SWARM, set up in a very distributed, dynamic way, to do inference and training work on specific ‘lean’ LM models that would fit… in the rented NUC+GPU SWARM…
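Why ternary math suits small, low-power nodes: once weights are restricted to -1/0/+1, a matrix-vector product needs no multiplications, only additions, subtractions and skips. The sketch below follows the absmean quantization described for BitNet b1.58 (scale by the mean absolute weight, then round and clip to [-1, 1]), but it only covers the weight side of the forward pass. Activation quantization and training are omitted, and Python's `round` uses round-half-to-even, which may differ slightly from other implementations.

```python
from typing import List, Tuple


def absmean_ternary(weights: List[List[float]],
                    eps: float = 1e-8) -> Tuple[List[List[int]], float]:
    """Quantize weights to {-1, 0, +1} with a single scale gamma = mean |W|."""
    flat = [abs(w) for row in weights for w in row]
    gamma = sum(flat) / len(flat)

    def round_clip(x: float) -> int:
        return max(-1, min(1, round(x)))

    q = [[round_clip(w / (gamma + eps)) for w in row] for row in weights]
    return q, gamma


def ternary_matvec(q: List[List[int]], x: List[float]) -> List[float]:
    # Multiplication-free: +1 adds the input, -1 subtracts it, 0 skips it.
    out = []
    for row in q:
        acc = 0.0
        for t, xi in zip(row, x):
            if t == 1:
                acc += xi
            elif t == -1:
                acc -= xi
        out.append(acc)
    return out
```

The layer output is then approximately `gamma * ternary_matvec(q, x)`, so the only real multiplication per output is the single rescale at the end.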

It’s a pipe dream right now for sure, but an interesting one.

And we await DAVE to find out more about CLI-level API stuff on the client side as well.

What would be helpful for Autonomi is an open protocol for announcing services on Autonomi for discovery… Then people can set up specialized nodes that perform services for a fee (in Autonomi tokens). Once we have something like this in place, people could set up LLMs as a service on the network. Many other CPU/GPU-intensive apps, as well as oracles, could be set up with such a protocol too.
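A rough sketch of what a service announcement record and discovery filter might contain: a service kind, an endpoint, a price and an expiry so stale entries drop out. Every field name, the `node://` endpoint format and the token pricing unit are hypothetical; no such protocol exists on Autonomi yet as far as this thread says, and where the records would be published is left open.

```python
import json
import time
from dataclasses import asdict, dataclass
from typing import List, Optional


@dataclass
class ServiceAnnouncement:
    service_id: str
    kind: str              # e.g. "llm-inference" or "oracle"
    endpoint: str          # how to reach the node (format is an assumption)
    price_per_call: float  # in network tokens (hypothetical unit)
    expires_at: float      # unix timestamp; expired entries are ignored

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @staticmethod
    def from_json(s: str) -> "ServiceAnnouncement":
        return ServiceAnnouncement(**json.loads(s))


def discover(announcements: List[ServiceAnnouncement], kind: str,
             now: Optional[float] = None) -> List[ServiceAnnouncement]:
    """Return live services of the requested kind, cheapest first."""
    now = time.time() if now is None else now
    live = [a for a in announcements if a.kind == kind and a.expires_at > now]
    return sorted(live, key=lambda a: a.price_per_call)
```

Expiry timestamps are one simple way to keep a discovery list honest without a central registry: nodes that stop re-announcing simply disappear.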

Or is the idea more that an individual could run an AI app that stores its data on Autonomi?