What’s up with the title? you might say. I was about to come up with a more descriptive and informative title. I had a vague memory of having prefixed titles here on the forum with GitHub: [app name] for posts about apps, that also had code. This was several years ago when I wrote the first apps for Autonomi. I think it was meant to show that there is code for this thing. I squinted at it now and thought, yeah I think that’s the impression I still get.. So, there it is.

Now that IF is over things will change slightly on the Ryyn side.

It was early this winter that I started thinking about writing some kind of application running on Autonomi, directing focus here again after quite some time. It became clear to me pretty soon what it would be; it’s very similar to what I built to run on the network several years ago (virtual filesystem, database etc). And the reason is still the same; that’s what I want and have needed for years. Backups are such a PITA and an annoyance, and so is the data that I also want on my phone and on device a, b, c… and just trying to keep one’s digital things in one place, accessible without worry, without lots of maintenance and fiddling. And I want it to be mine of course, and private when I wish so. I know many share the experience! Even though I’m technical enough to work with these things, it’s just immensely frustrating to me, I do not want to spend time on it (hours here and there). I’d rather spend years building a better solution.

I happened to see this IF thing about a week or so before the applications closed, and I was doubtful until the very last about whether I should join in. I had looked forward to the quiet work in anonymity, and only announcing something when there was something really solid to use. So, that didn’t happen. There were pros and cons with participating. I got some input from the community - that is and will always be very valuable - but I did rush some development as well, causing a bit of technical debt. Actually though, I find that some good advancements are made also when you’ve got pressure on you - you can find out some class of things that you wouldn’t have otherwise. But it’s not good for code quality in the long run (though that is the reality for like.. 99% of all development?). So, really, I don’t know for sure either way in this case. But I was thinking along the way that Ryyn wasn’t really something for IF. There is no marketing going to be done. There is no selling, no making money on it. It’s just (going to be) there for anyone to use.

During IF I did largely stick to my anticipated “work in peace and quiet”, that to the outside may have looked like nothing much happening at all. And on the usability front that was pretty much the case! The work was (and is) focused on the foundation, and finding and discovering the best designs of components, design of the system itself, problem framing and the actual conceptual model. That model changed, mostly from distributed backup/virtual file system over some intermediate undefined states into what it is now (which doesn’t have a good name, but I’ll include an overview below). The conceptual model is quite firmly grounded now (but not entirely unfolded). However I see different concepts branching off of the same solutions. But not for a while.. and maybe not by me.

This application, with the ambitions I have, is a long-haul project. Most work has been, and continues to be very complex, to make the system simple - simple to understand, simple to use and simple to maintain (and of course, that’s a target no-one reaches fully - a mirage?). It will take a lot of time to get to where I want it to be. There is so much worked on that no user will ever think about, just to allow that very thing: for them to never think about it.

There is a similar application that is a somewhat suitable comparison, for grasping scale; Syncthing (github).

I’m impressed by the user adoption they have and the long time they’ve kept up the work. They are an excellent scale reference, with 11 years of work (at least?) and hundreds of contributors (a handful larger ones, and a major champ). Most of their work was done early on though, but I’m sure the longevity has depended on the ongoing work.

We’re building somewhat different things, but I think the scale is not wildly off. I have high ambitions for this application. I’m confident that I can build something simpler, with better features, using less code. (I also have the advantage that I may learn from their mistakes.) Plenty could say the same - given enough time hard things become doable. But it is also the case that I am not constraining this to any particular timeline. I will do this simply. As long as Autonomi keeps developing and becoming more and more usable, then I will get my device sync built so I can rest in peace with my data. (I’m of course joking a bit.) It’s a huge motivator that this is something many both want and need, and it is a great satisfaction to be able to do something for others - you know, something that actually matters.

Anyway, I think all that would mean that there will be no more IF things for me, since I won’t start building another app, and as I understood it, the same application can’t participate twice. So I may not put that much effort for a while into involving others in the progress. It takes a lot more time than it would seem (to me at least) to produce material like tutorials, user docs etc. But that does not mean that things are not moving. My language will be commits. It takes a while to learn it, but I will be telling you all a lot about progress that way (and occasional notes here when something lands).


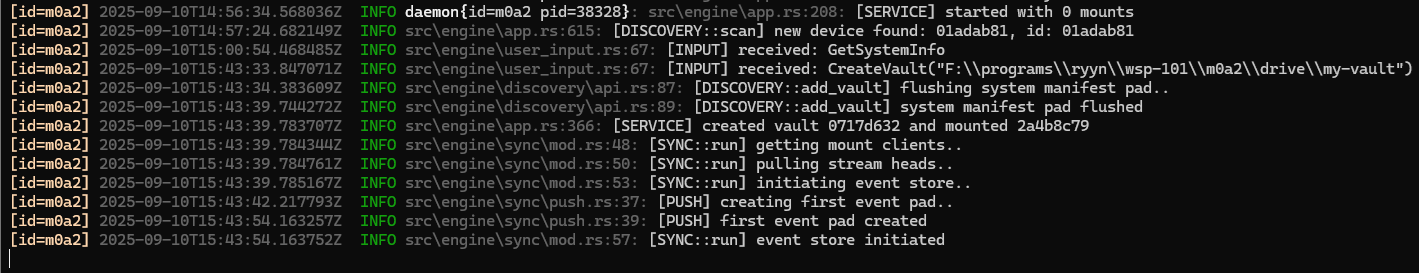

But! I will include an overview of the conceptual model now - already implemented in code - below this post. This overview is not perfect, consistent, complete or finished, because this as well takes ages to write up (for me at least).

I will always answer questions, or help out if there are issues using the application. So just shoot, whenever.
